“≤10-year Timelines Remain Unlikely Despite DeepSeek and o3” by Rafael Harth

Audio note: this article contains 32 uses of LaTeX notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

[Thanks to Steven Byrnes for feedback and the idea for section §3.1. Also thanks to Justice from the LW feedback team.]

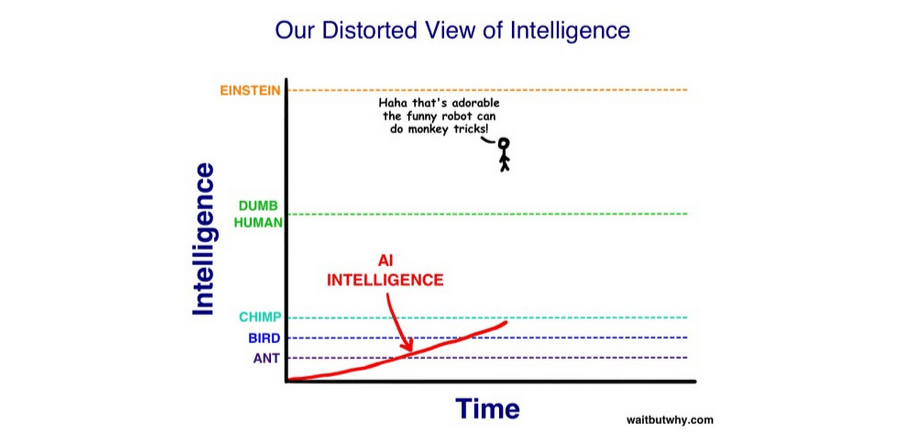

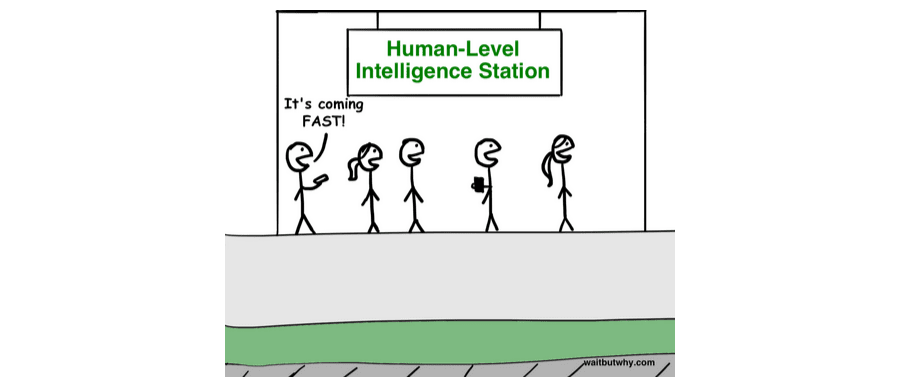

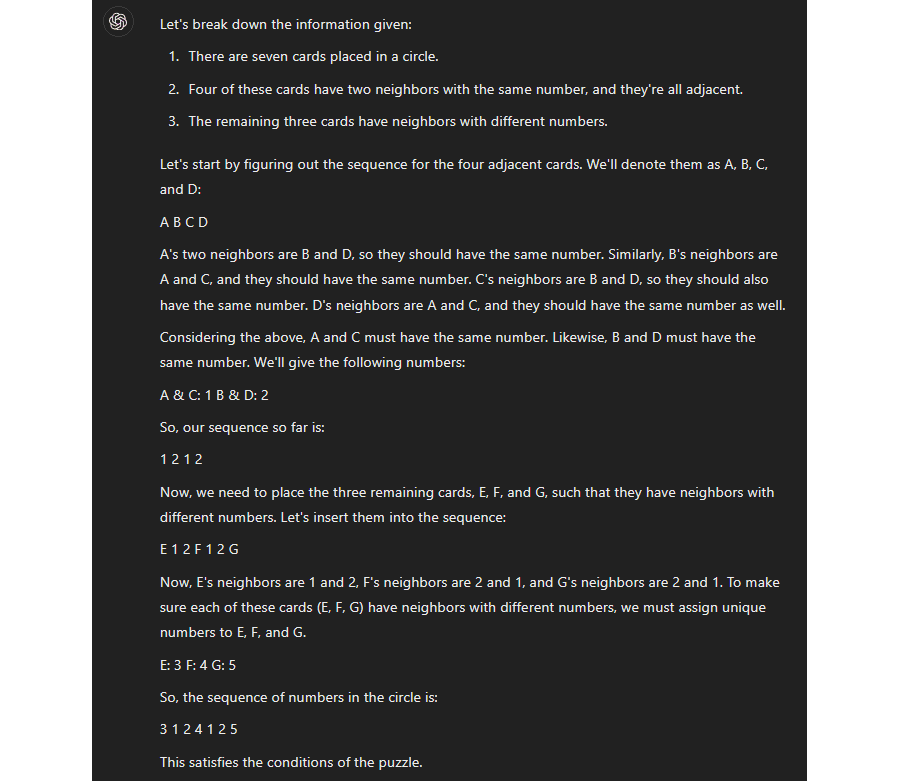

Remember this?

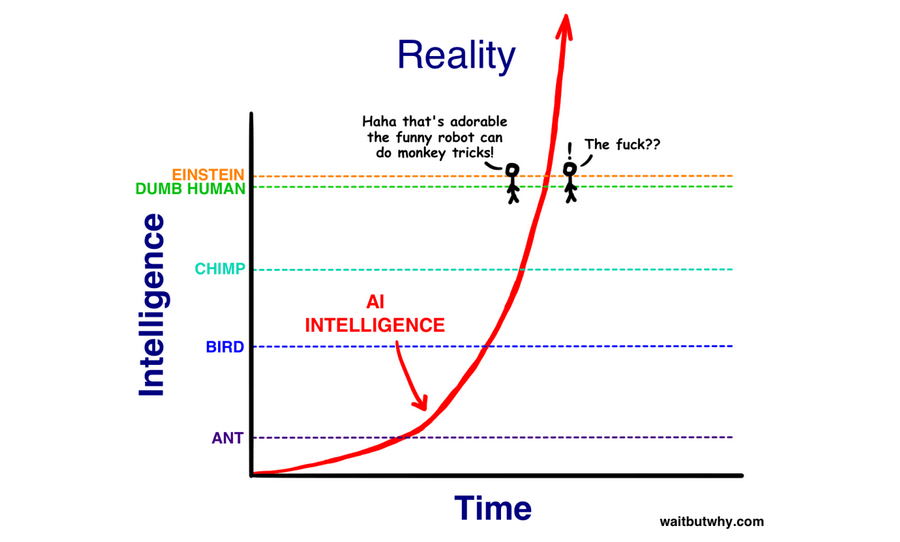

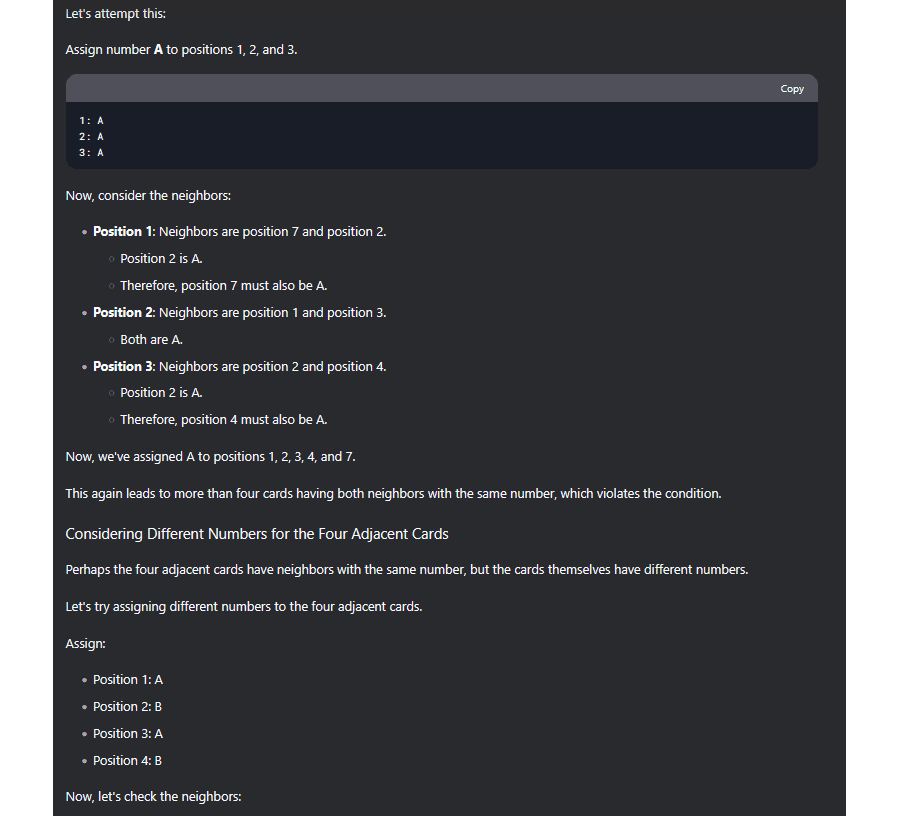

Or this?

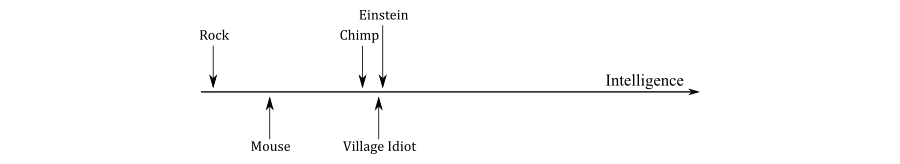

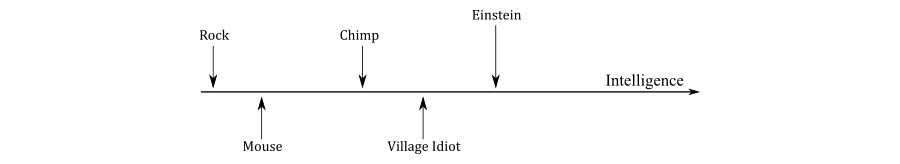

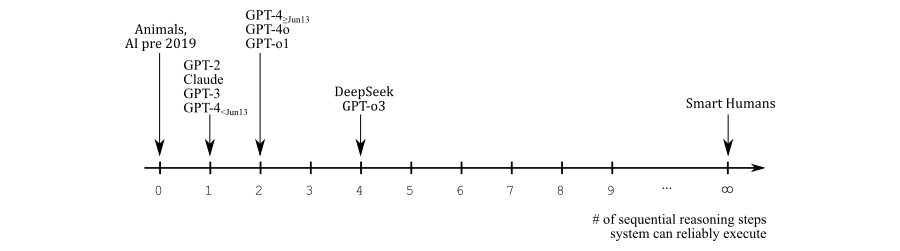

The images are from WaitButWhy, but the idea was voiced by many prominent alignment people, including Eliezer Yudkowsky and Nick Bostrom. The argument is that the difference in brain architecture between the dumbest and the smartest humans is so small that the step from subhuman to superhuman AI should happen extremely quickly. This idea was very pervasive at the time. It's also wrong. I don't think most people on LessWrong have a good model of why it's wrong, and [...]

---

Outline:

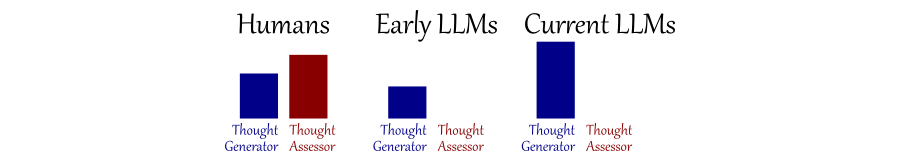

(02:07) 1. Why Village Idiot to Einstein is a Long Road: The Two-Component Model of Intelligence

(06:04) 2. Sequential Reasoning

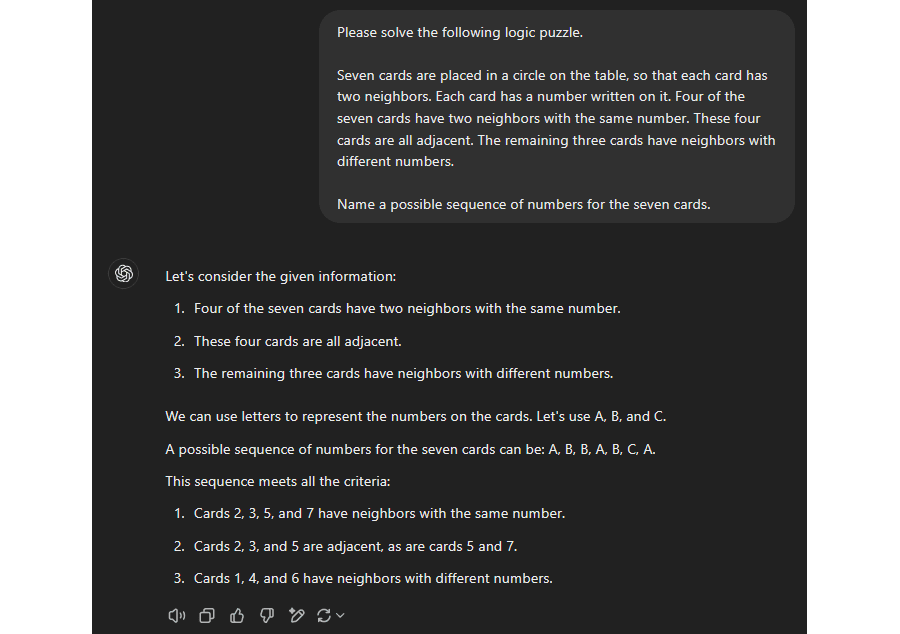

(07:03) 2.1 Sequential Reasoning without a Thought Assessor: the Story so Far

(13:03) 2.2 Sequential Reasoning without a Thought Assessor: the Future

(16:29) 3. More Thoughts on Sequential Reasoning

(16:33) 3.1. Subdividing the Category

(21:09) 3.2. Philosophical Intelligence

(27:17) 4. Miscellaneous

(27:21) 4.1. Your description of thought generation and assessment seems vague, underspecified, and insufficiently justified to base your conclusions on.

(28:08) 4.2. If your model is on the right track, doesn't this mean that human+AI teams could achieve significantly superhuman performance and that these could keep outperforming pure AI systems for a long time?

(29:48) 4.3. What does this mean for safety, p(doom), etc.?

The original text contained 4 footnotes, which were omitted from this narration.

---

First published:

February 13th, 2025

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts or another podcast app.