“Do reasoning models use their scratchpad like we do? Evidence from distilling paraphrases” by Fabien Roger

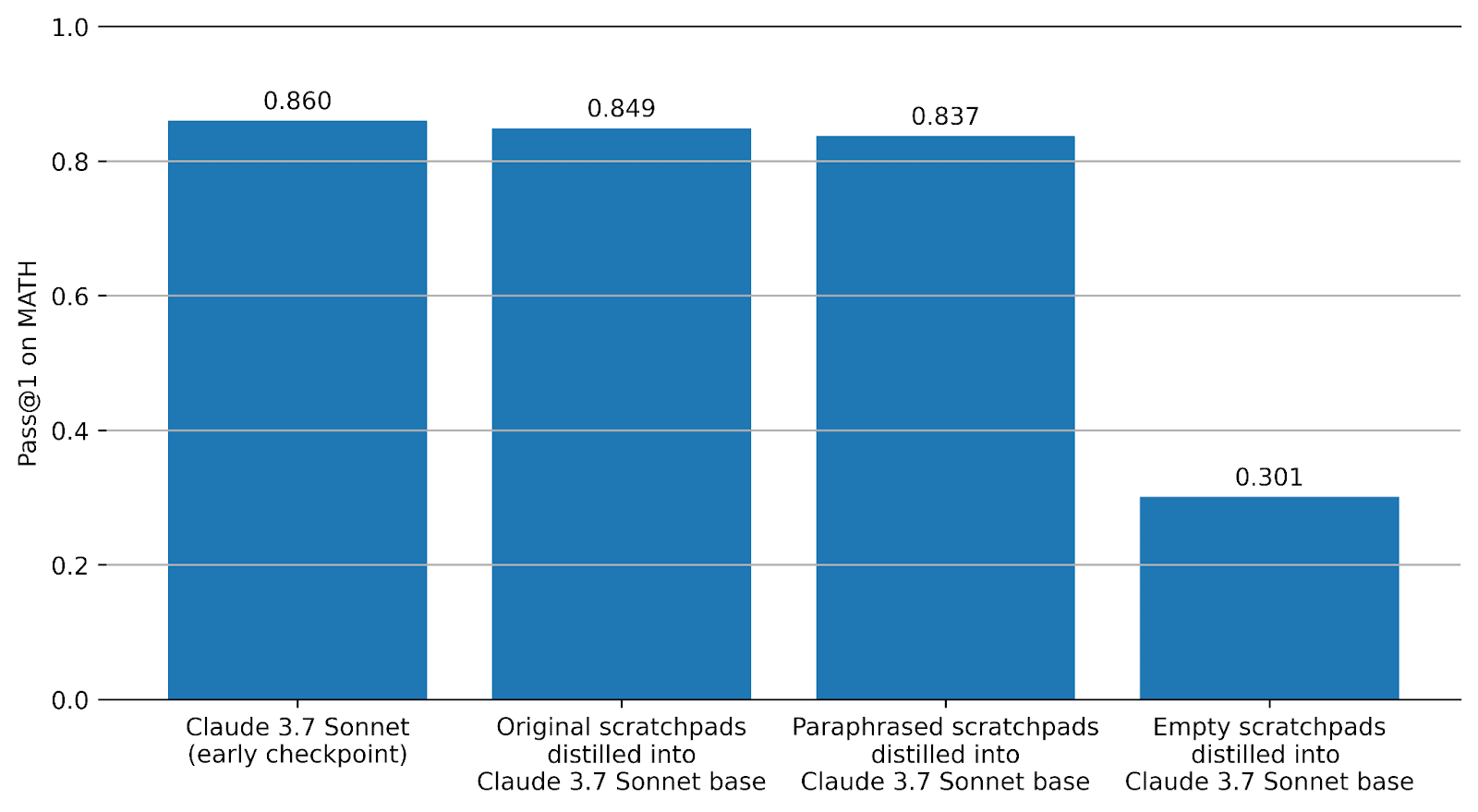

TL;DR: We provide some evidence that Claude 3.7 Sonnet doesn’t encode hidden reasoning in its scratchpad by showing that training it to use paraphrased versions of the scratchpads does not degrade performance.

The scratchpads from reasoning models look human-understandable: when reasoning about a math problem, reasoning models consider intermediate steps similar to the ones I would use, and they backtrack and double-check their work as I would.[1]

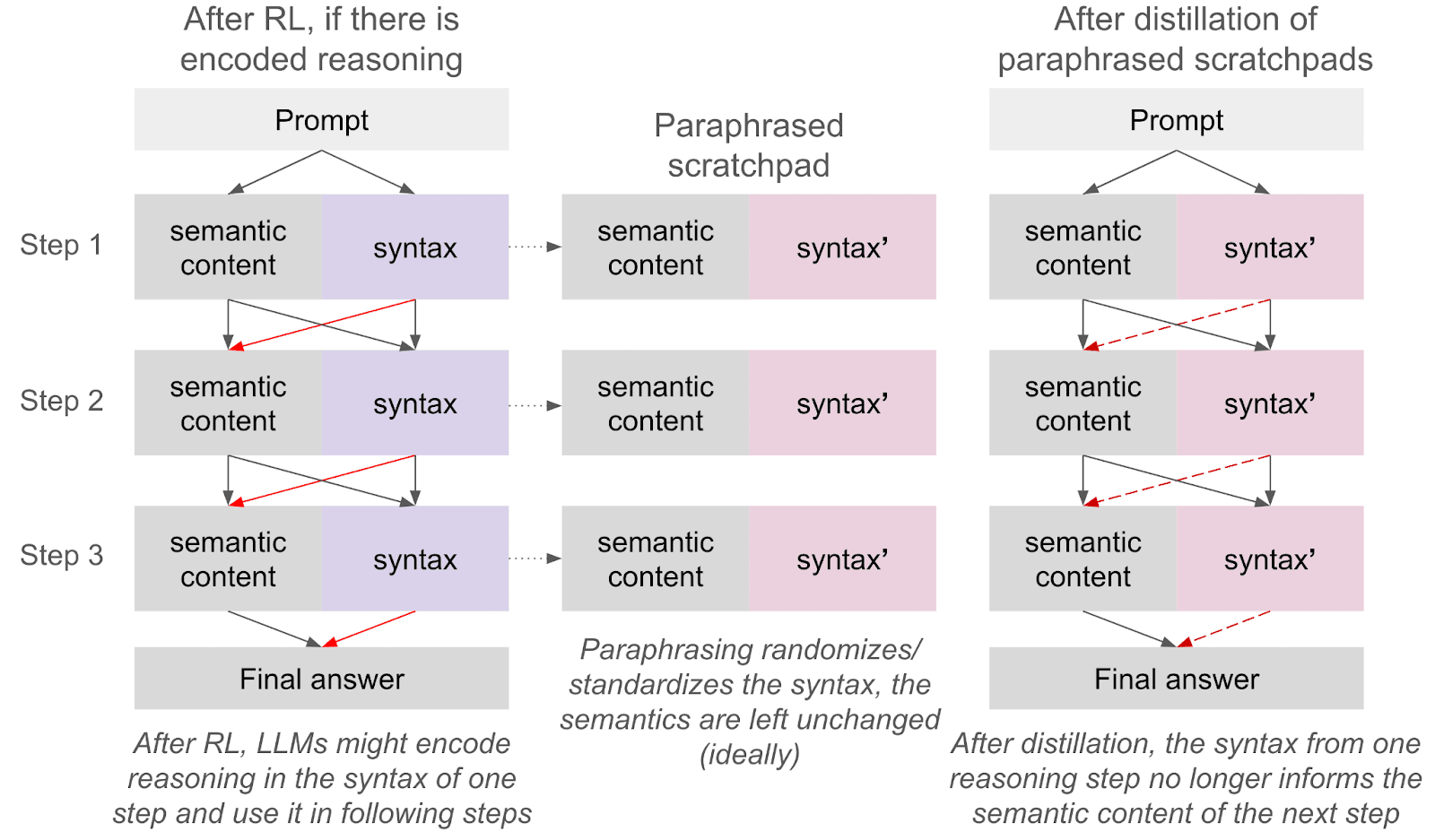

But even if scratchpads look like they perform human-like reasoning, scratchpads might improve performance through some less human-understandable mechanisms. One particularly worrying possibility is that models could encode additional reasoning in the syntax of the text (e.g. encoding a bit in the choice of a bulleted vs. a numbered list, and then using this bit later in the scratchpad). This is sometimes called encoded reasoning or Chain-of-Thought steganography. If LLMs learned how to use encoded reasoning during RL, they might be able to use it in [...]
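To make the list-style example concrete, here is a minimal toy sketch of how one bit could be hidden in the choice between a numbered and a bulleted list, and how a paraphrase that normalizes formatting would erase it. All function names (`encode_bit`, `decode_bit`, `toy_paraphrase`) are hypothetical, and the regex-based `toy_paraphrase` is a hand-written stand-in for illustration only, not the paraphraser used in the post.

```python
import re


def encode_bit(items, bit):
    """Render items as a numbered list (bit=1) or a bulleted list (bit=0)."""
    if bit:
        return "\n".join(f"{i}. {item}" for i, item in enumerate(items, 1))
    return "\n".join(f"- {item}" for item in items)


def decode_bit(text):
    """Recover the hidden bit from the list style of the first line."""
    first_line = text.splitlines()[0]
    return 1 if re.match(r"\d+\.\s", first_line) else 0


def toy_paraphrase(text):
    """Toy stand-in for a paraphraser: keep each item's content,
    but rewrite every list marker to the same '*' bullet."""
    lines = []
    for line in text.splitlines():
        content = re.sub(r"^(\d+\.|-)\s+", "", line)
        lines.append(f"* {content}")
    return "\n".join(lines)


steps = ["factor the quadratic", "check both roots"]
with_one = encode_bit(steps, 1)
with_zero = encode_bit(steps, 0)

# The bit is recoverable from the raw scratchpad text...
assert decode_bit(with_one) == 1
assert decode_bit(with_zero) == 0

# ...but both versions become identical after paraphrasing,
# so nothing downstream can depend on the hidden bit.
assert toy_paraphrase(with_one) == toy_paraphrase(with_zero)
```

The same logic motivates the experiment in the TL;DR: if performance depended on information carried by formatting rather than content, training on paraphrased scratchpads would be expected to hurt.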

---

Outline:

(02:54) More results

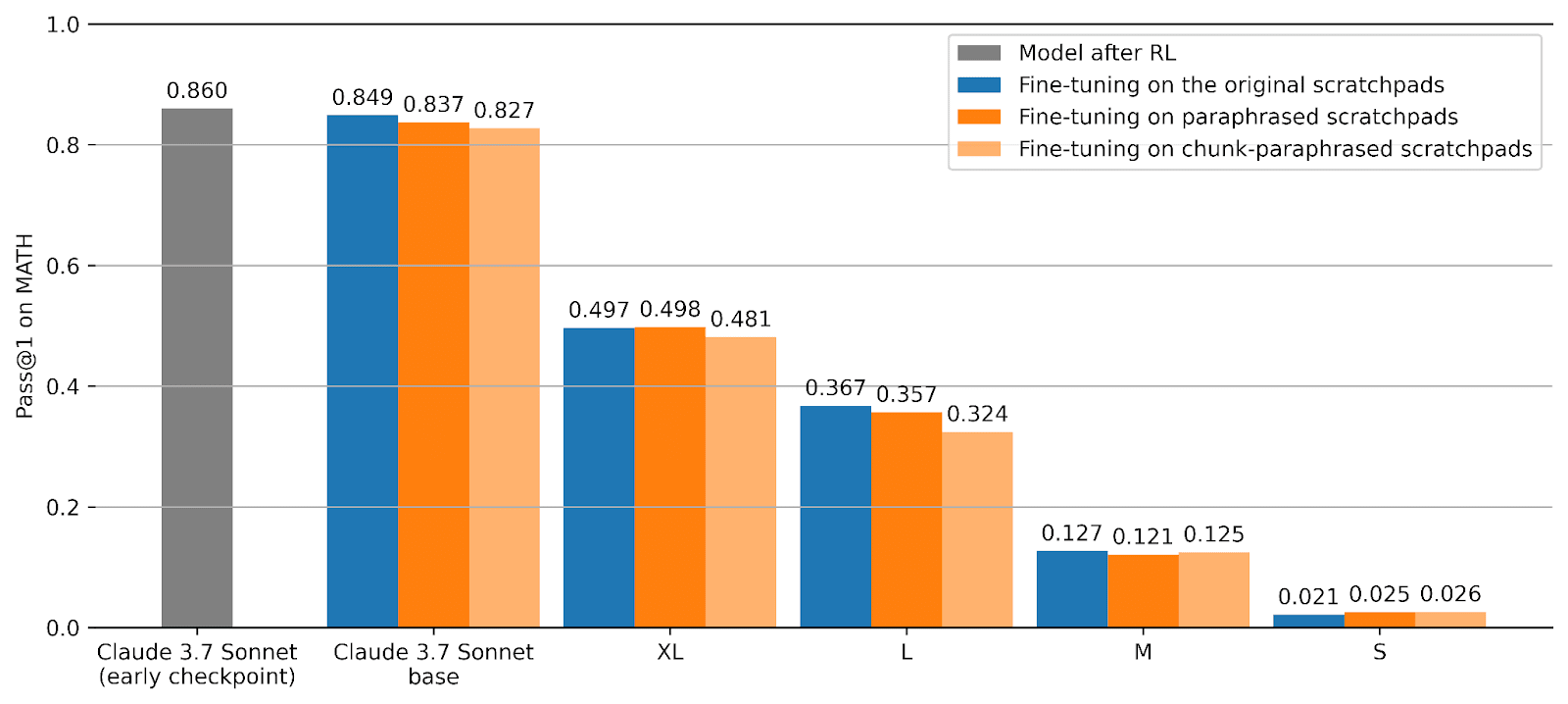

(02:57) Different paraphrasers and base models

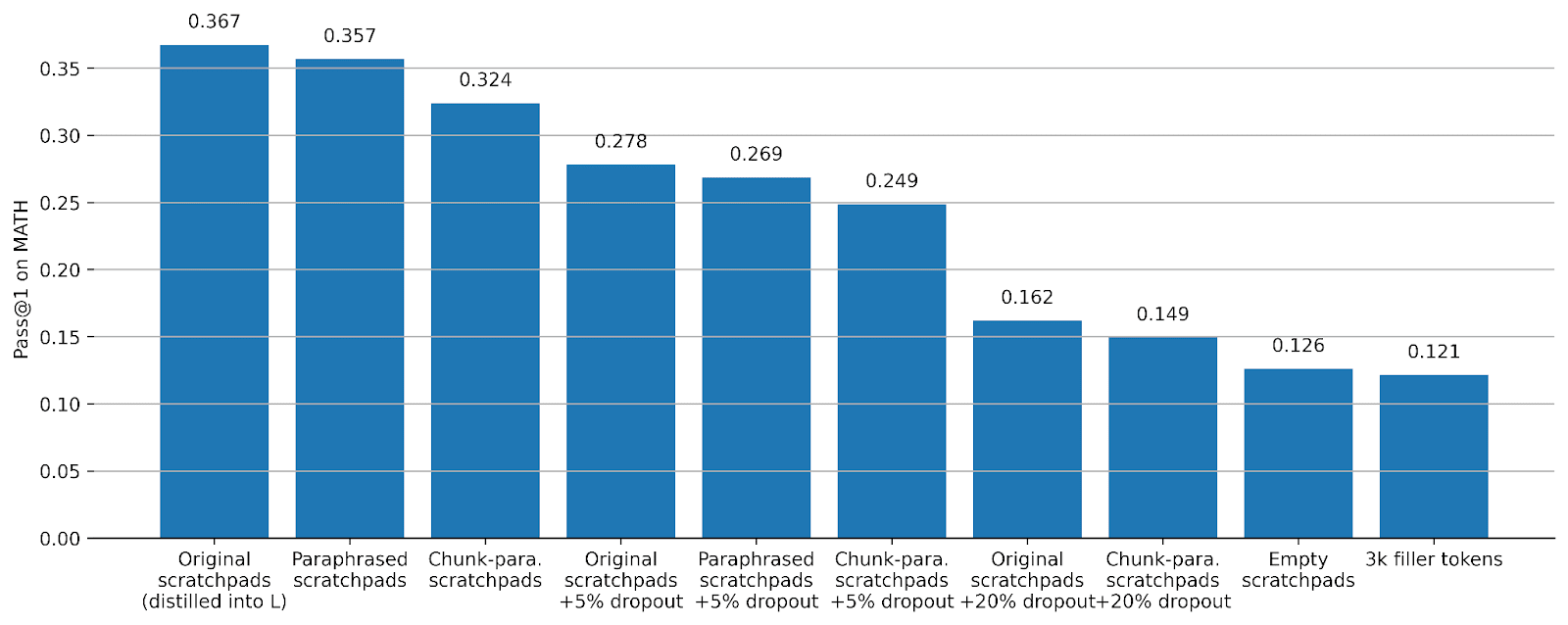

(04:14) Using word dropout and filler tokens

(05:32) Examples of paraphrased scratchpads

The original text contained 8 footnotes which were omitted from this narration.

---

First published:

March 11th, 2025

Narrated by TYPE III AUDIO.
