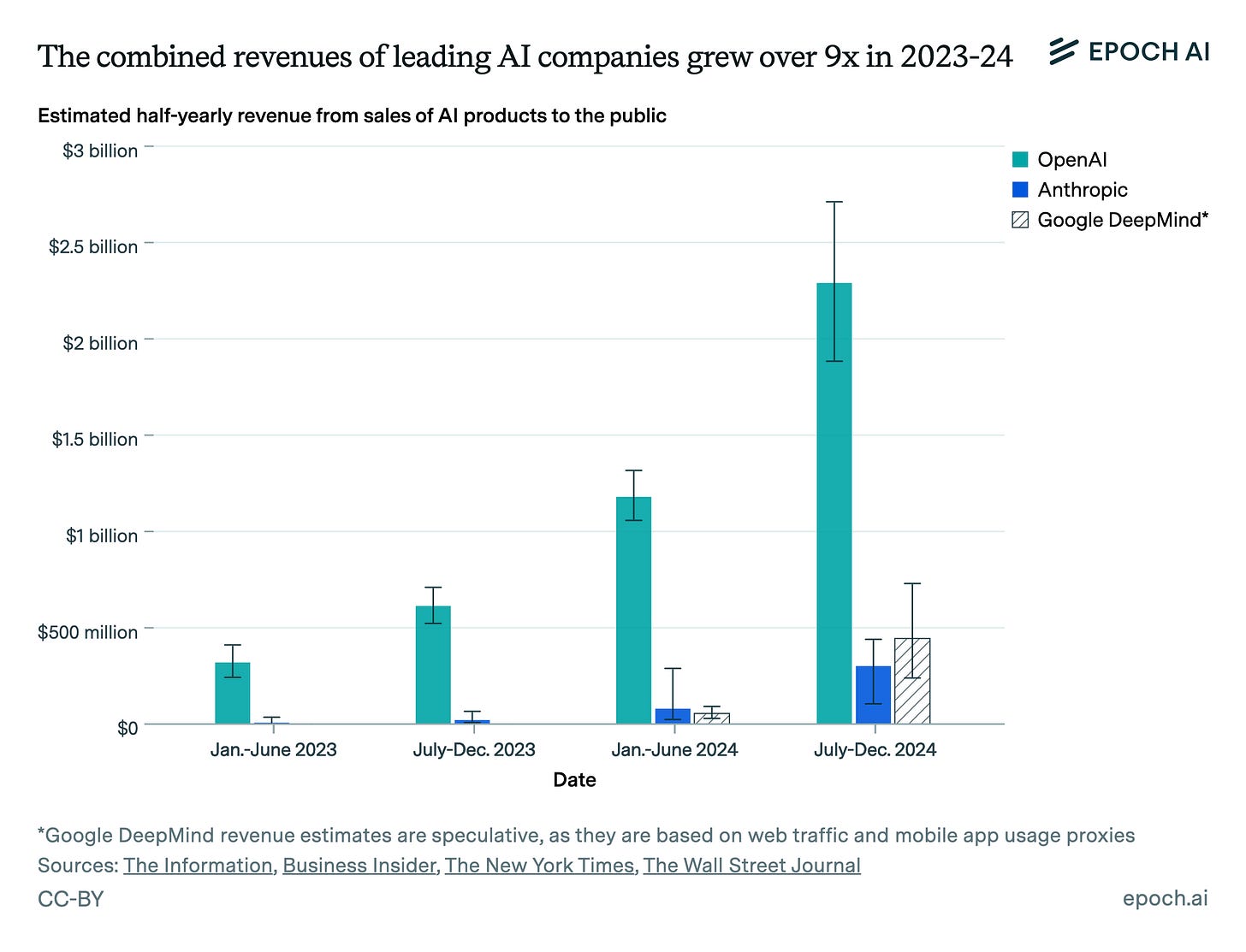

In recent months, the CEOs of leading AI companies have grown increasingly confident about rapid progress:

- OpenAI's Sam Altman: Shifted from saying in November “the rate of progress continues” to declaring in January “we are now confident we know how to build AGI”.
- Anthropic's Dario Amodei: Stated in January “I’m more confident than I’ve ever been that we’re close to powerful capabilities… in the next 2-3 years”.
- Google DeepMind's Demis Hassabis: Changed from “as soon as 10 years” in autumn to “probably three to five years away” by January.

What explains the shift? Is it just hype? Or could we really have Artificial General [...]

---

Outline:

(04:12) In a nutshell

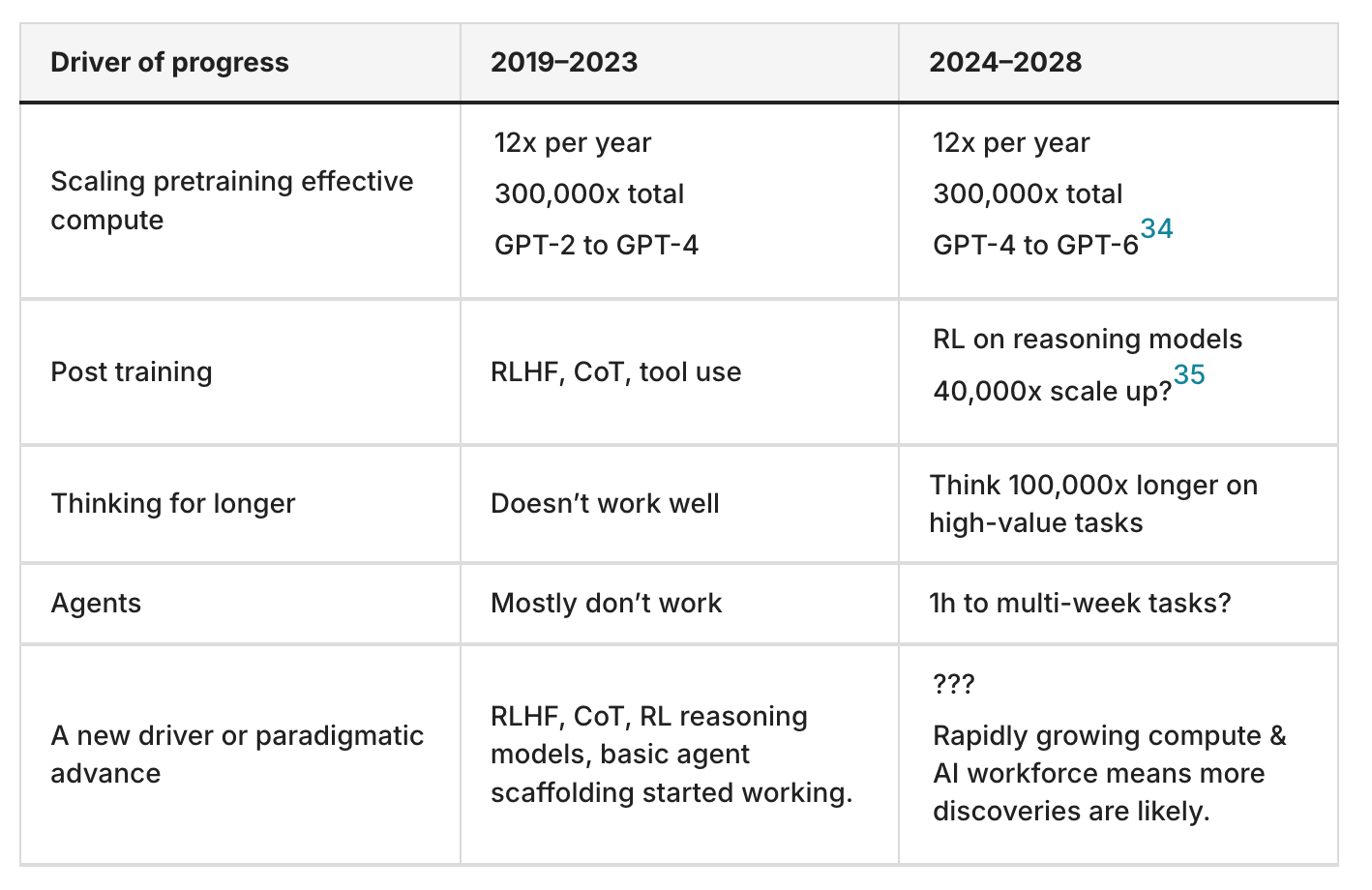

(05:54) I. What's driven recent AI progress? And will it continue?

(06:00) The deep learning era

(08:33) What's coming up

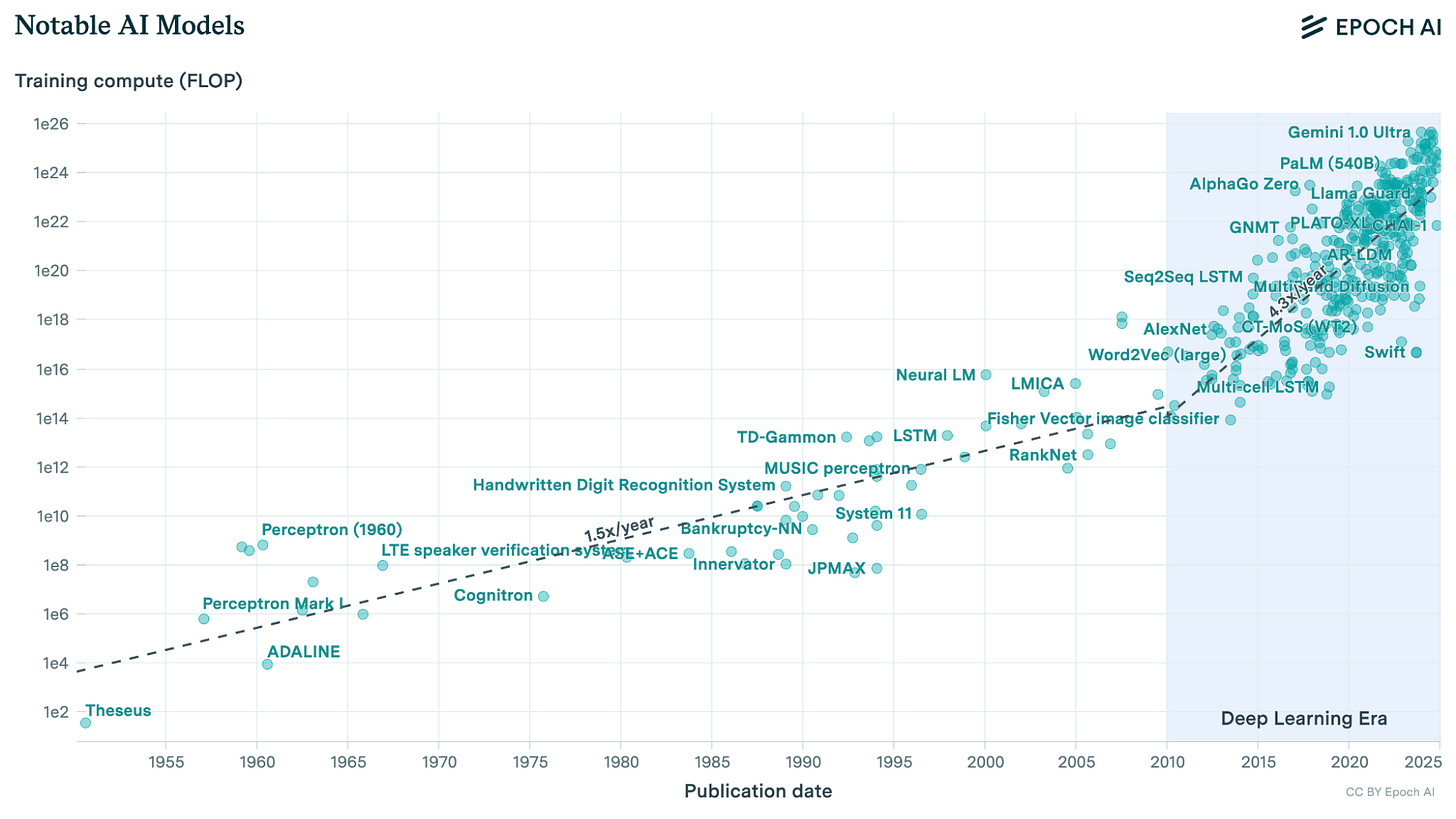

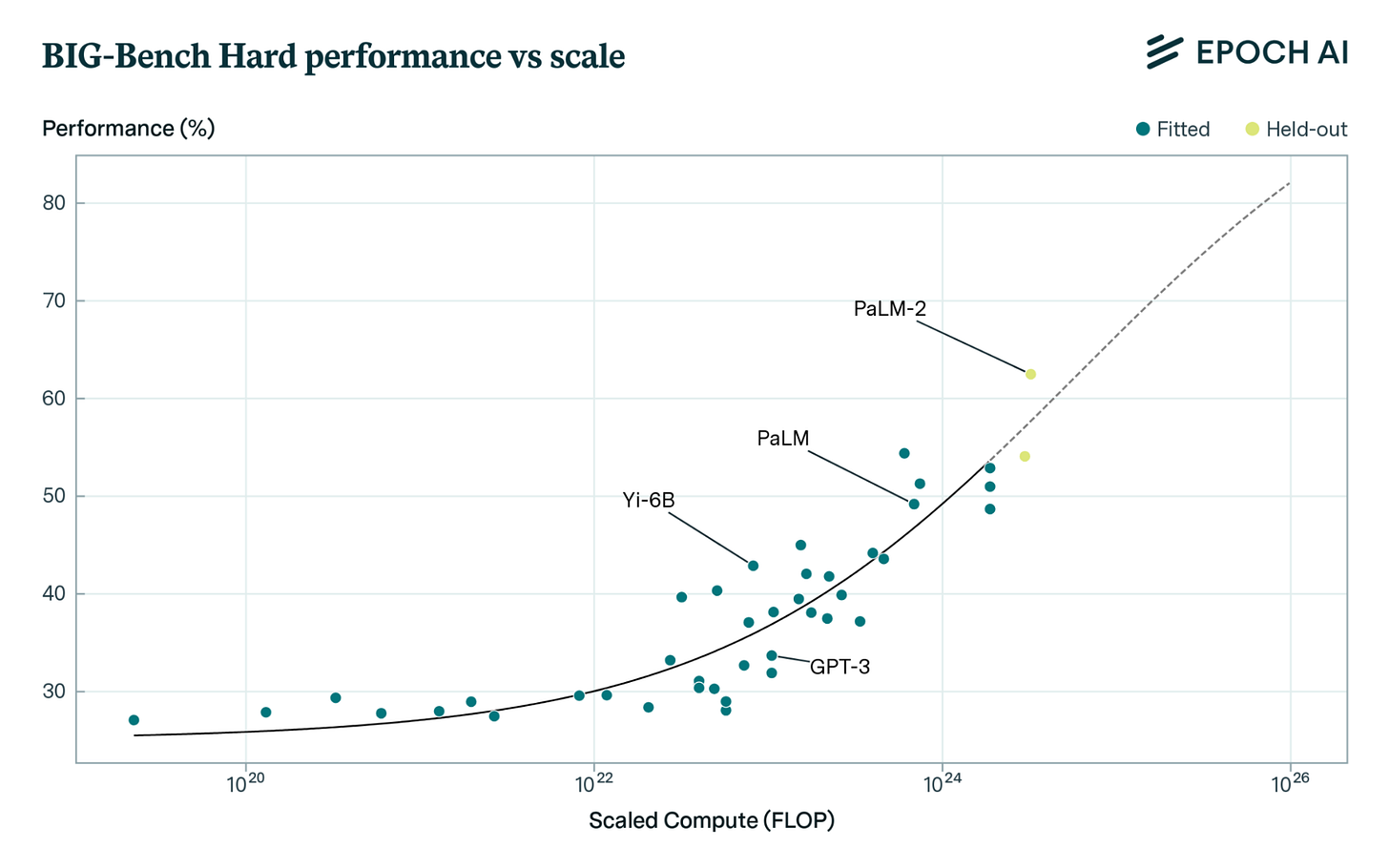

(09:35) 1. Scaling pretraining to create base models with basic intelligence

(09:42) Pretraining compute

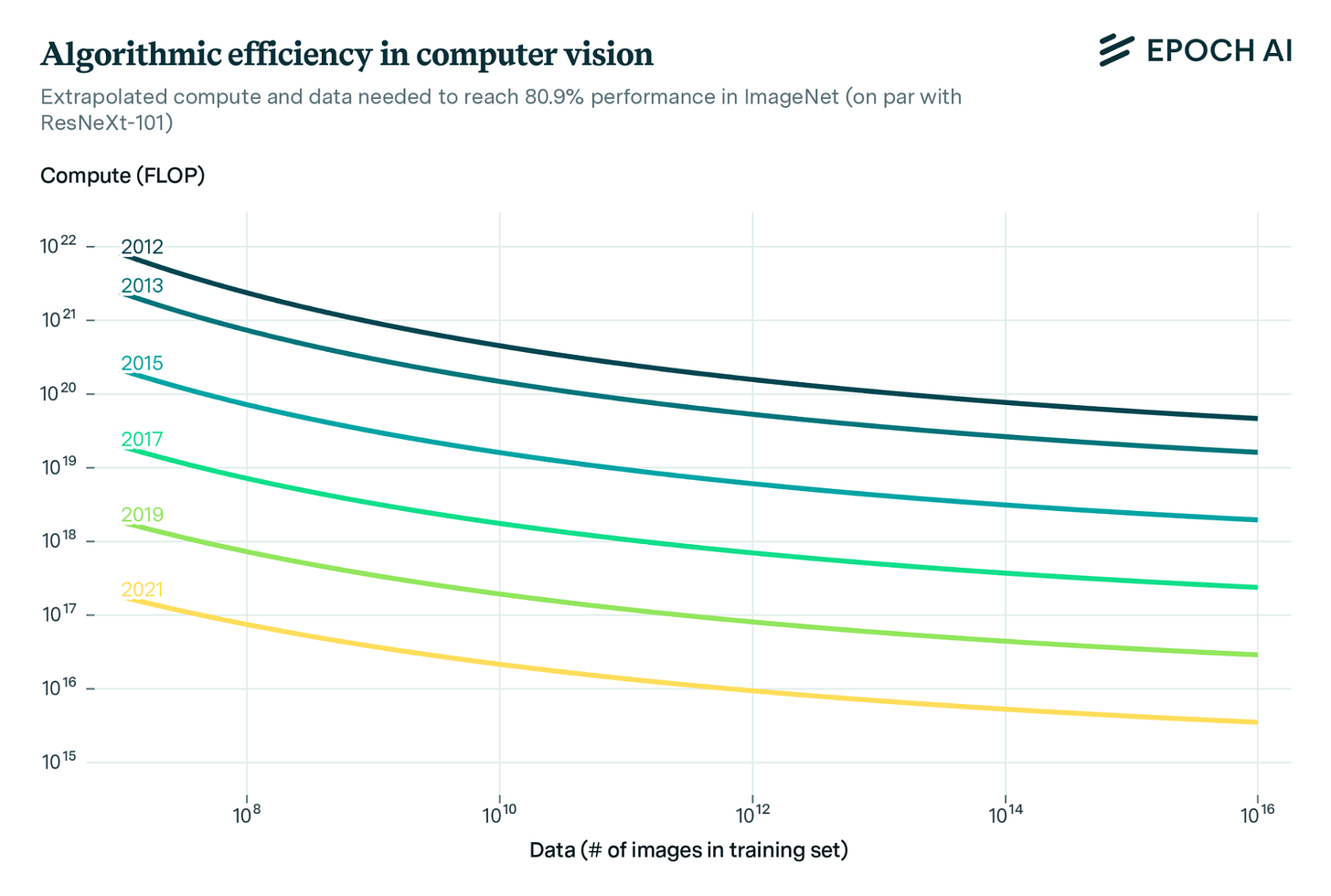

(13:14) Algorithmic efficiency

(15:08) How much further can pretraining scale?

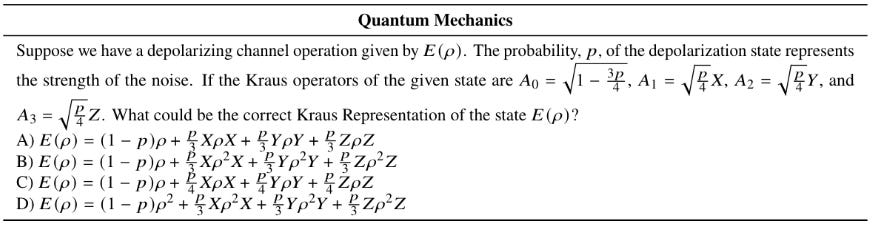

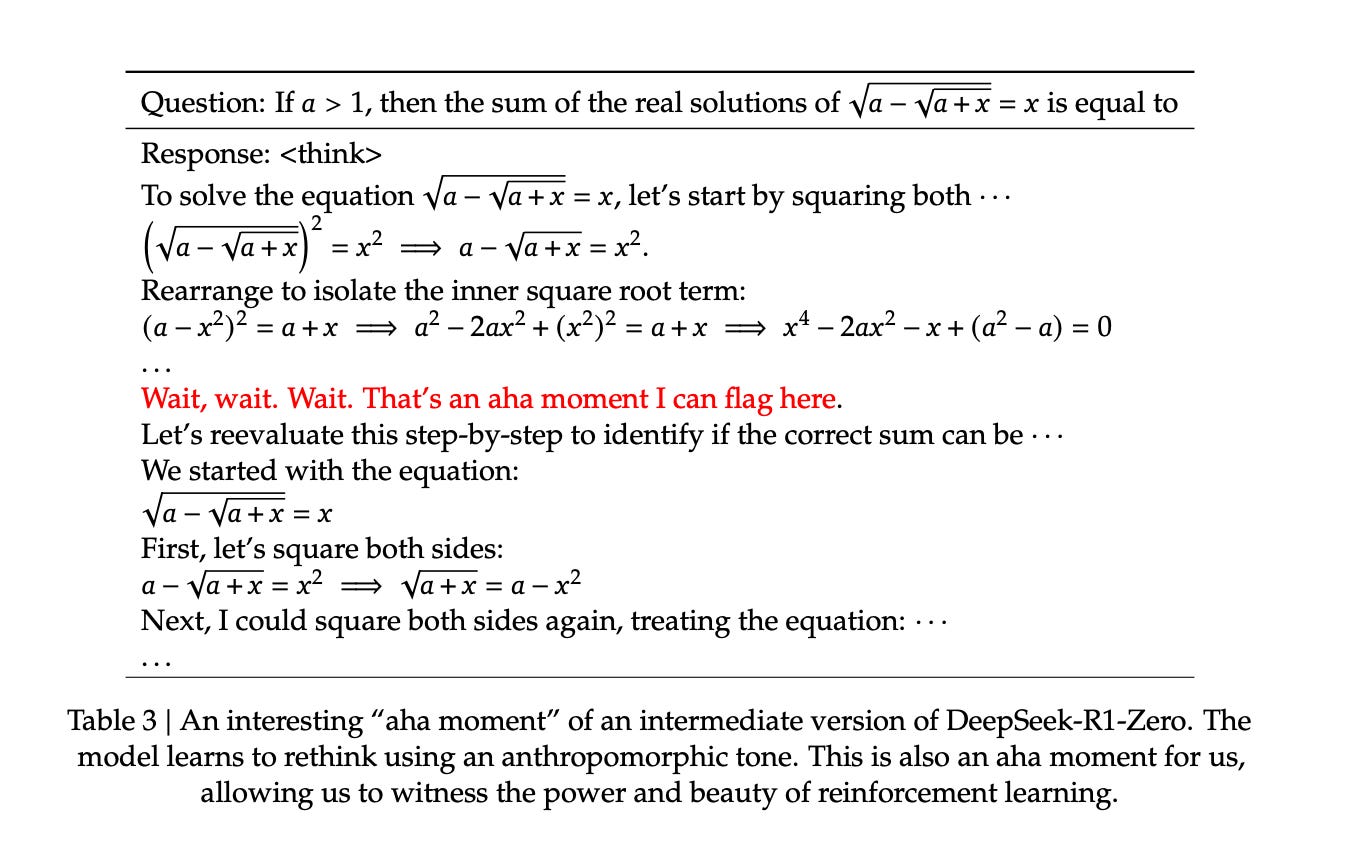

(16:58) 2. Post training of reasoning models with reinforcement learning

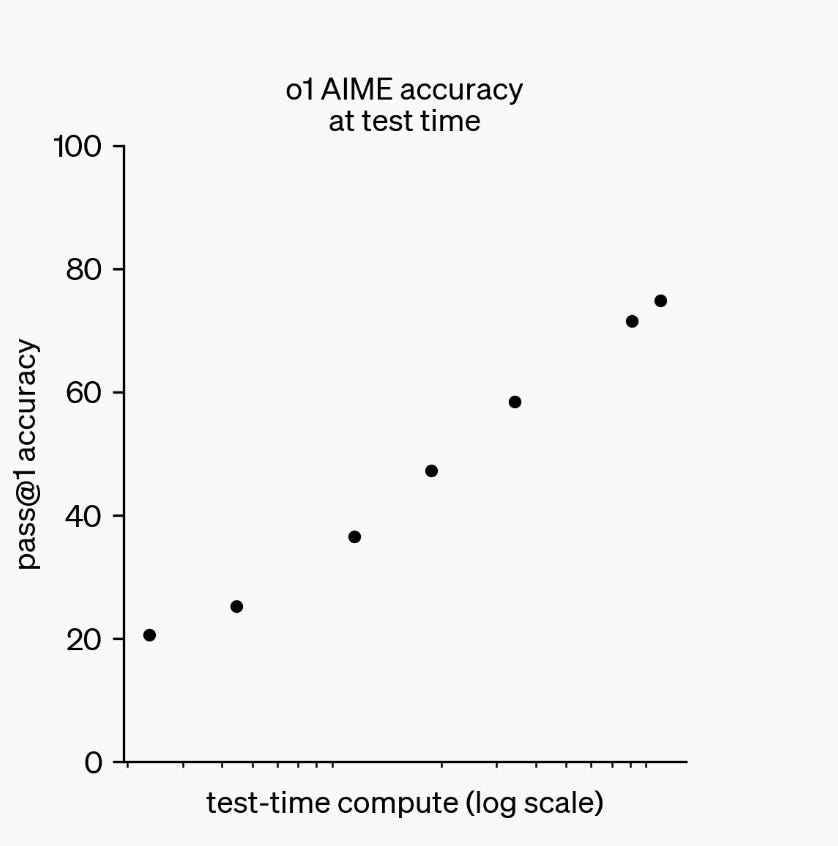

(22:50) How far can scaling reasoning models continue?

(26:09) 3. Increasing how long models think

(29:04) 4. The next stage: building better agents

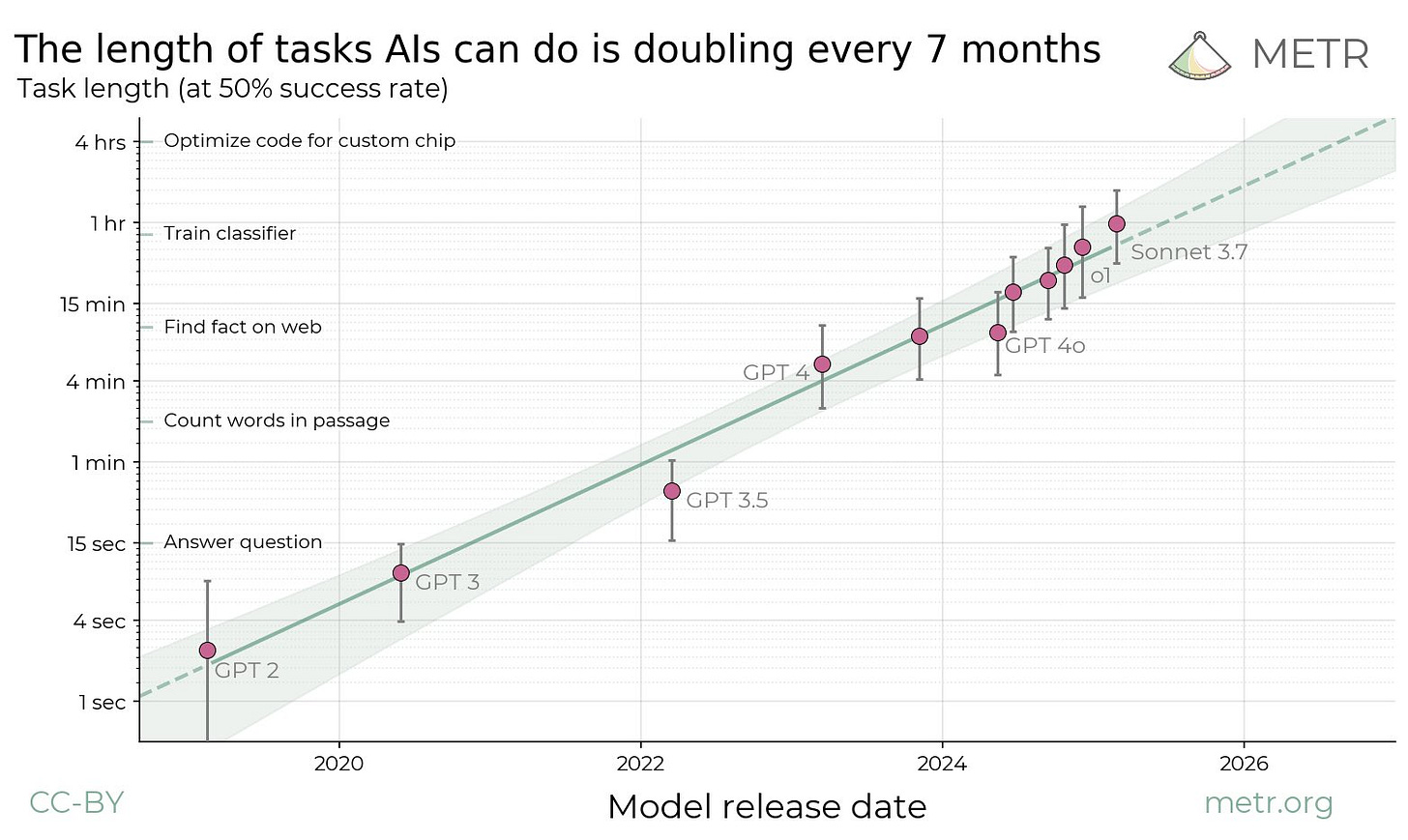

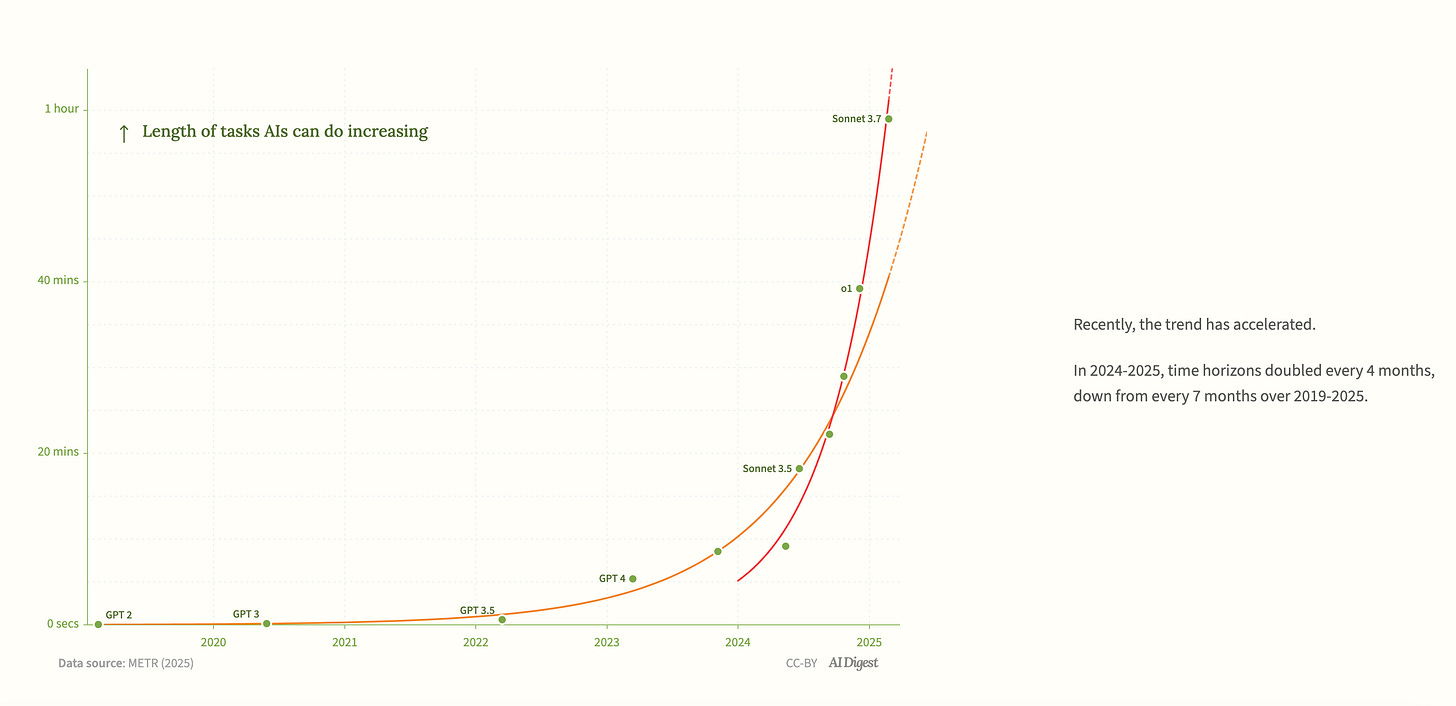

(34:59) How far can the trend of improving agents continue?

(37:15) II. How good will AI become by 2030?

(37:20) The four drivers projected forwards

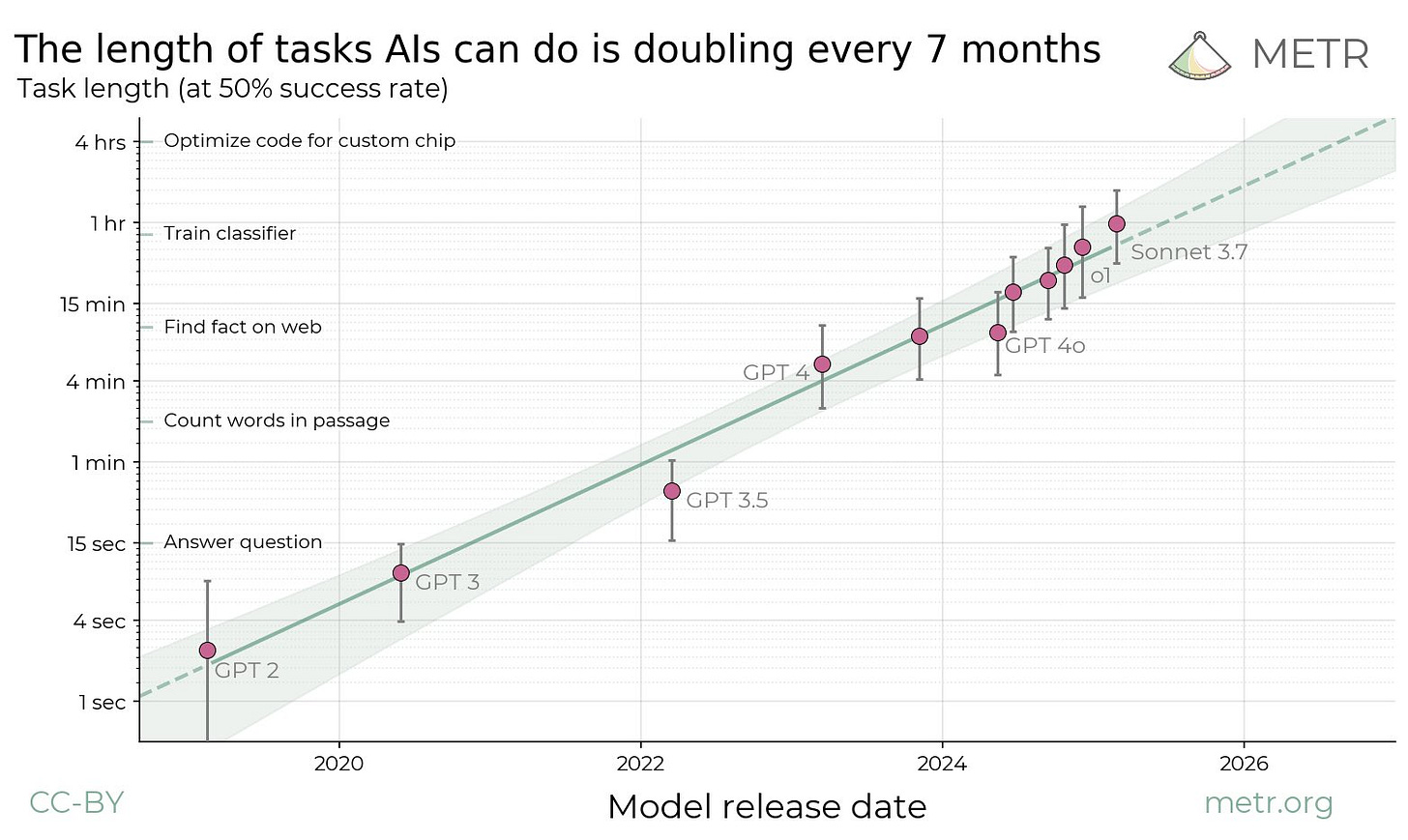

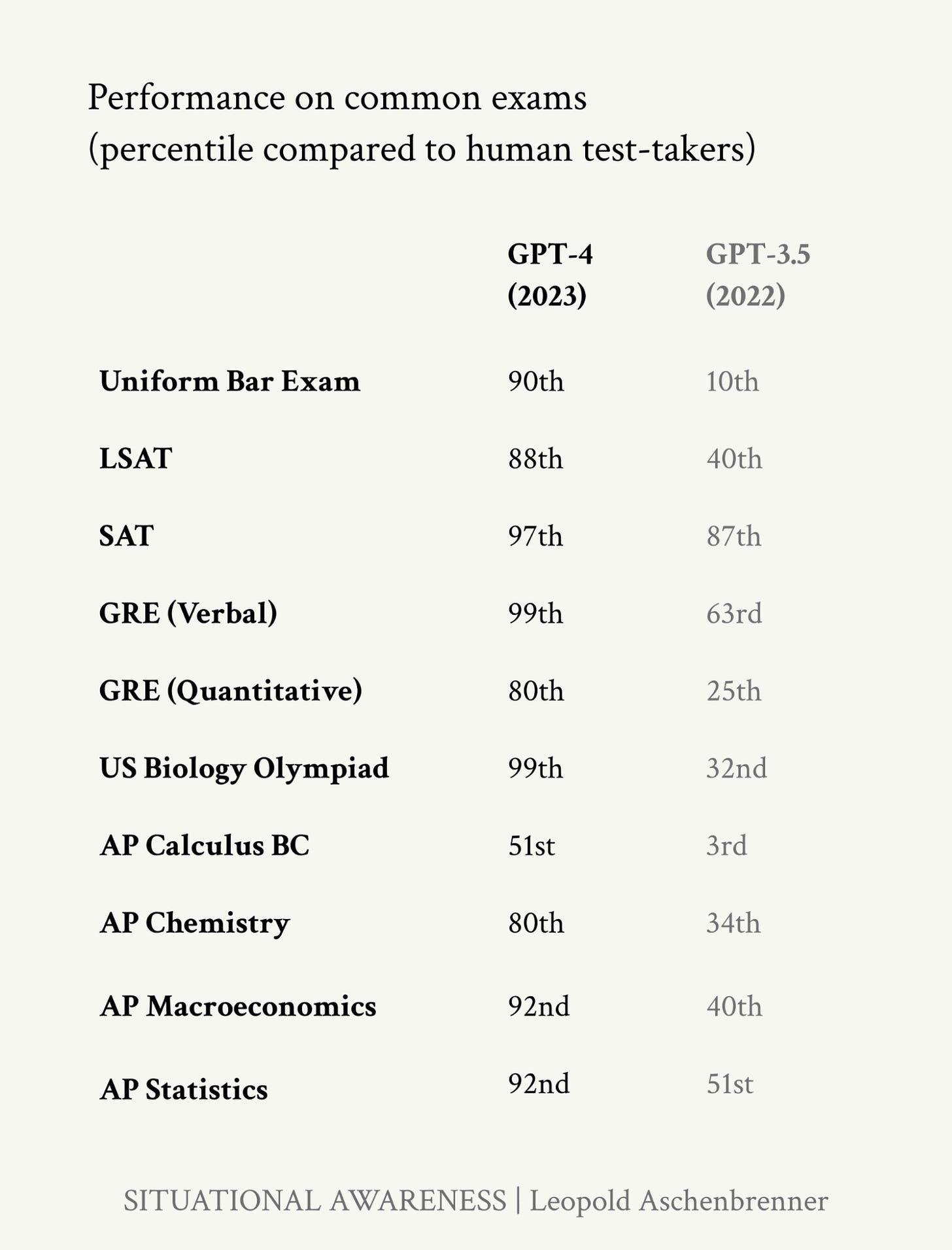

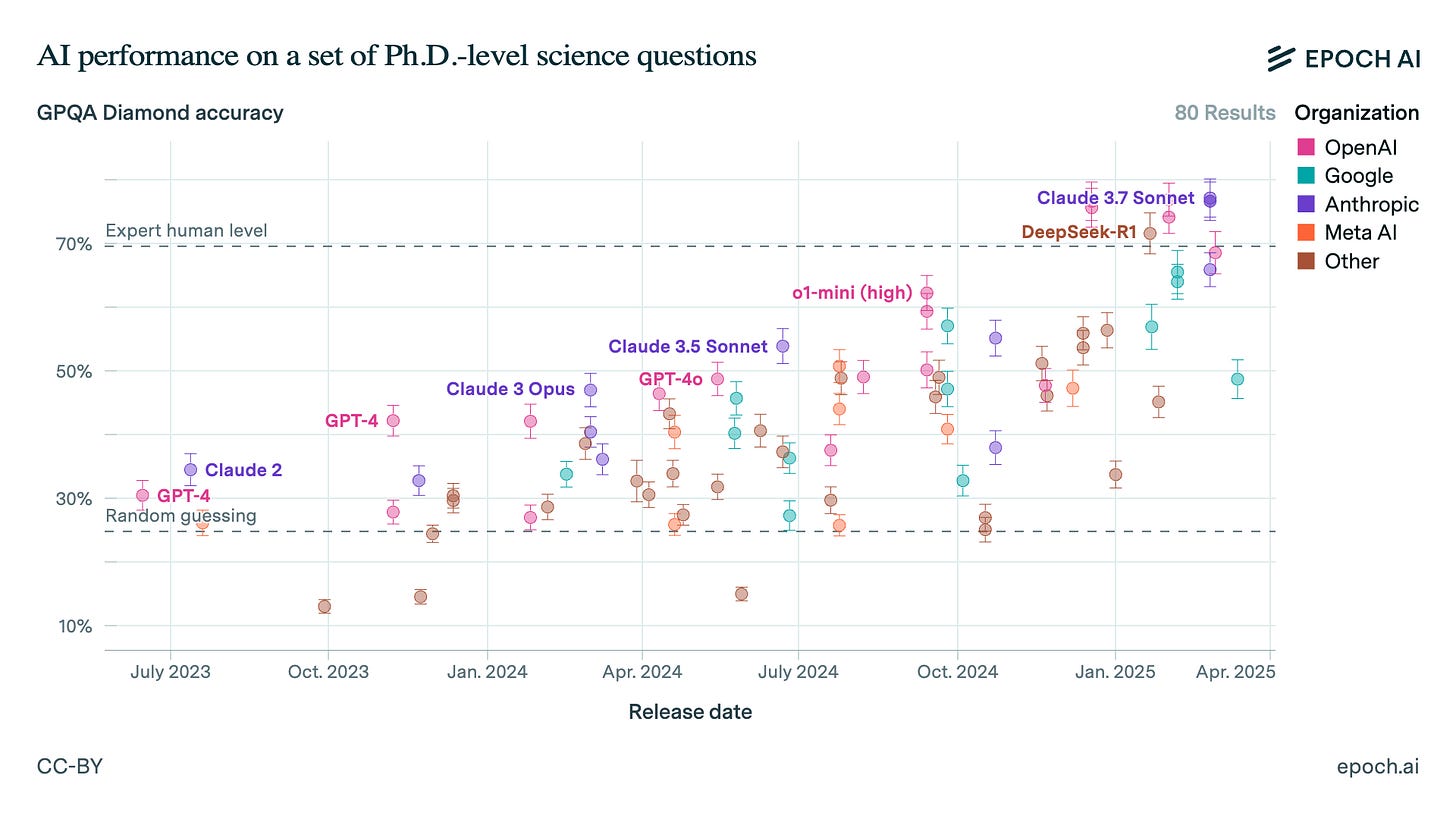

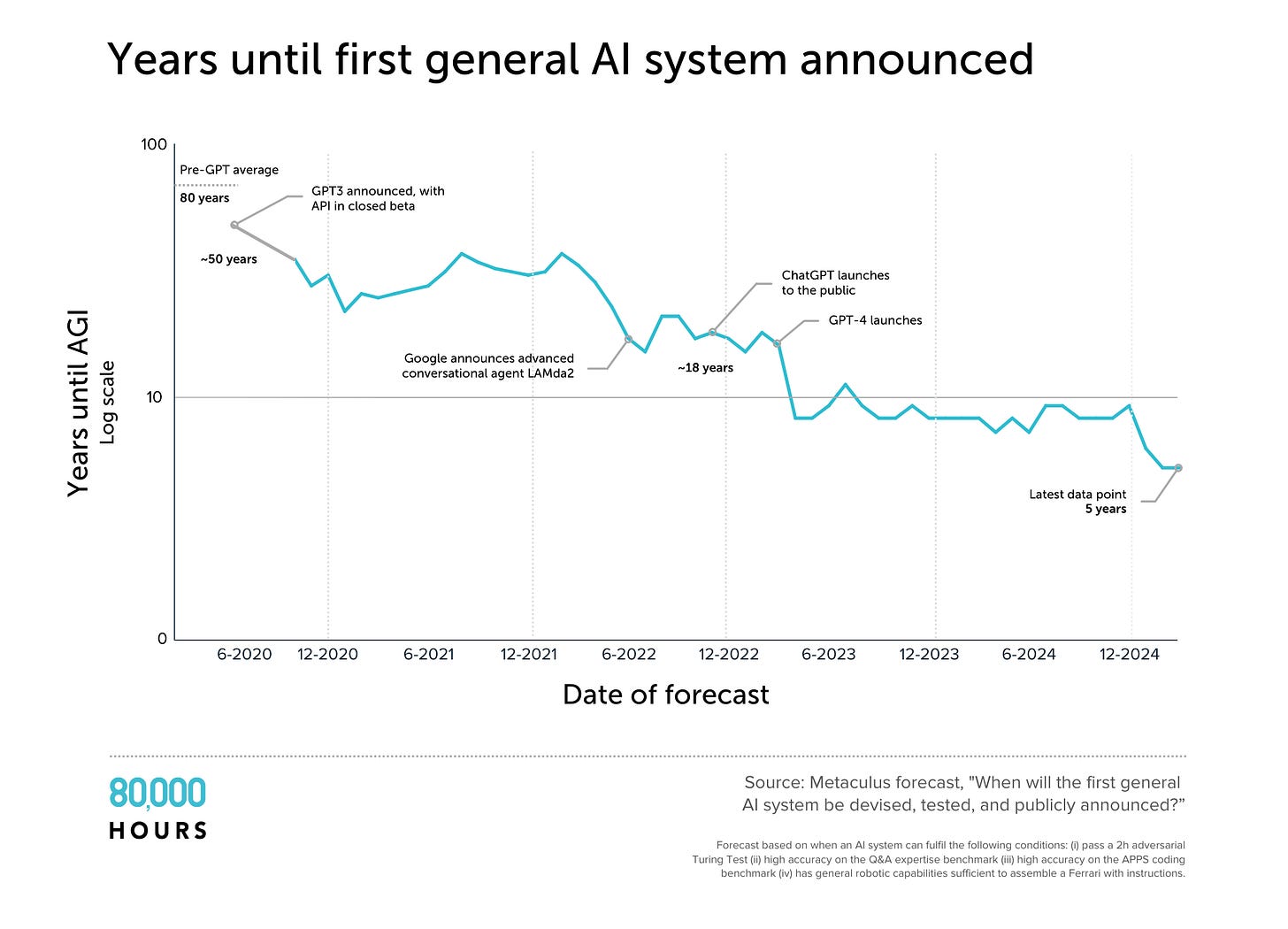

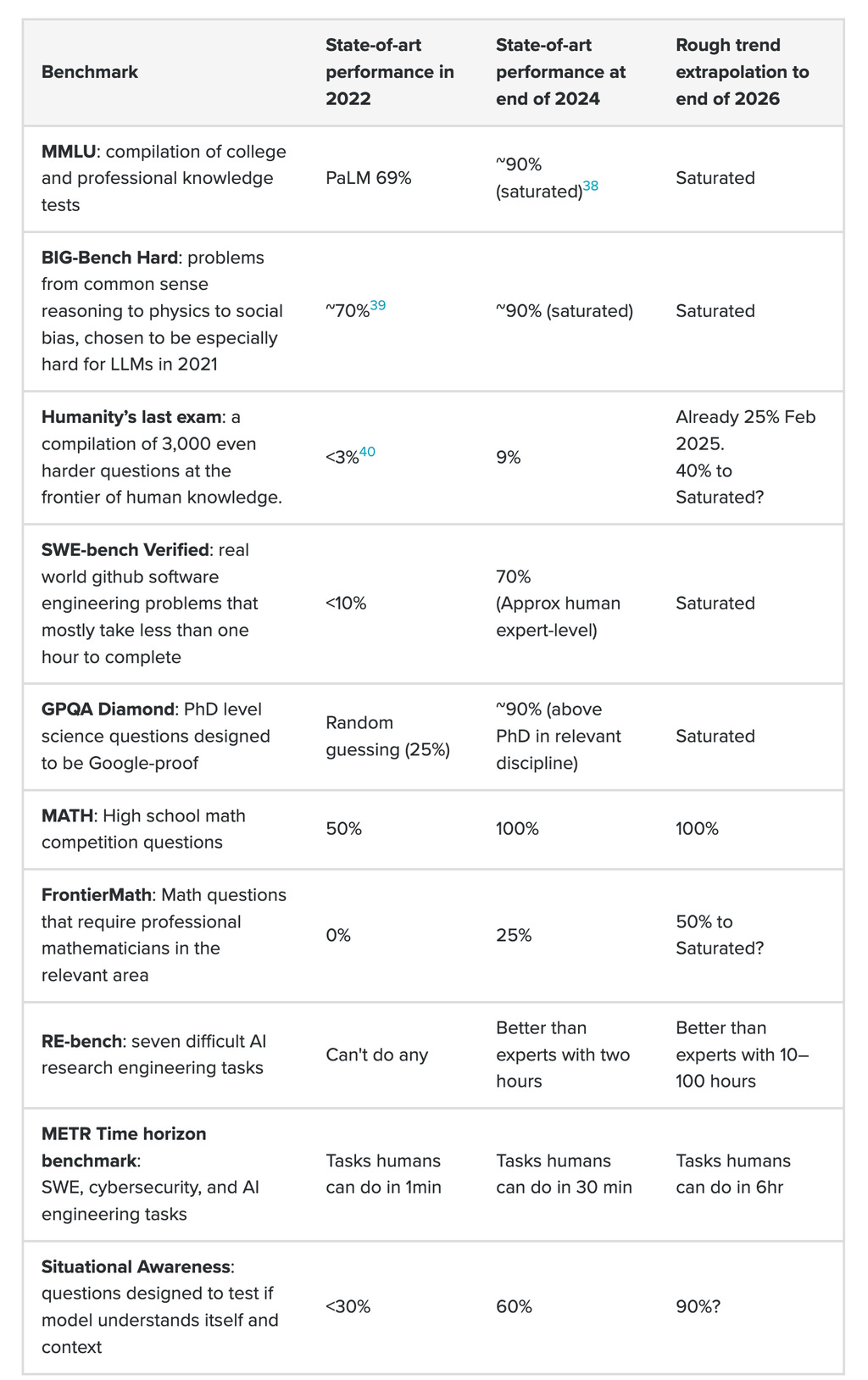

(39:01) Trend extrapolation of AI capabilities

(40:19) What jobs would these systems be able to help with?

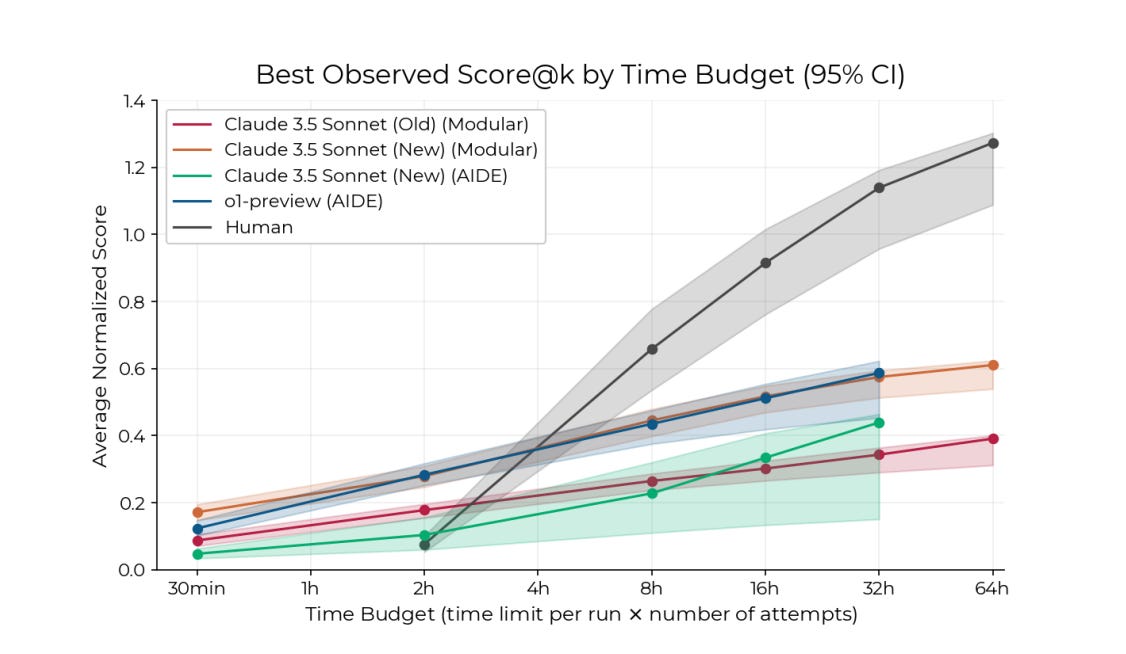

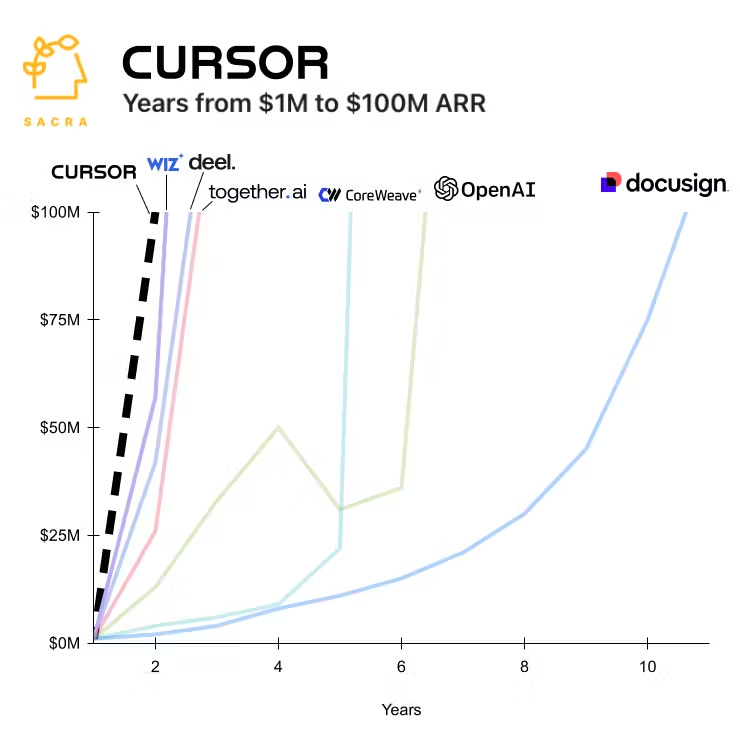

(41:11) Software engineering

(42:34) Scientific research

(43:45) AI research

(44:57) What's the case against impressive AI progress by 2030?

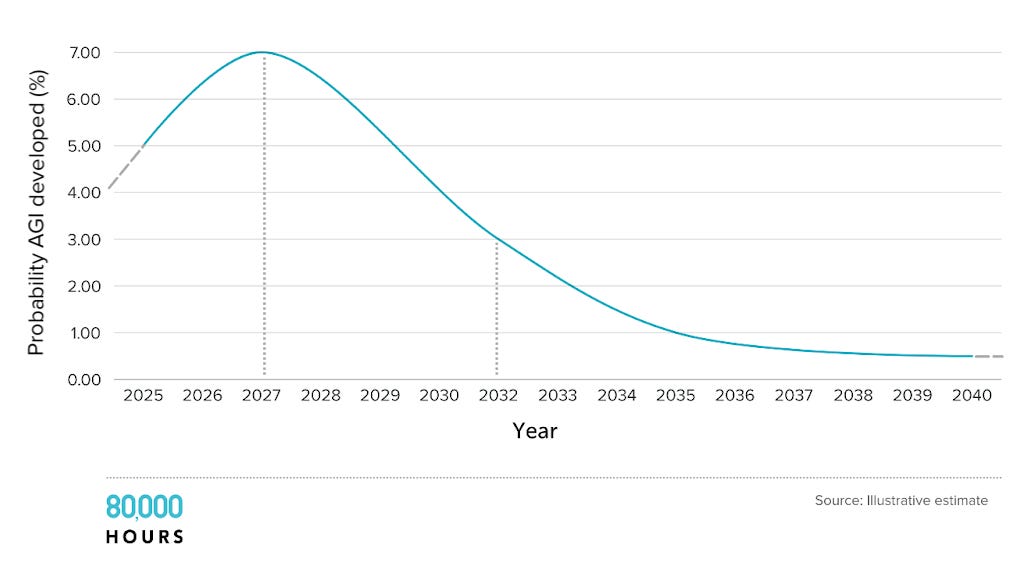

(49:39) When do the 'experts' expect AGI to arrive?

(51:04) III. Why the next 5 years are crucial

(52:07) Bottlenecks around 2030

(55:49) Two potential futures for AI

(57:52) Conclusion

(59:08) Use your career to tackle this issue

(59:32) Further reading

The original text contained 47 footnotes which were omitted from this narration.

---

First published: April 9th, 2025

Source: https://www.lesswrong.com/posts/NkwHxQ67MMXNqRnsR/the-case-for-agi-by-2030

Narrated by TYPE III AUDIO.
