Ubuntu Security Podcast

242 episodes • Length: 20 min • Monthly

About the podcast

A fortnightly podcast talking about the latest developments and updates from the Ubuntu Security team, including a summary of recent security vulnerabilities and fixes as well as a discussion on some of the goings on in the wider Ubuntu Security community.

The Ubuntu Security Podcast is created by the Ubuntu Security Team. The podcast and the artwork on this page are embedded using the public podcast feed (RSS).

Episodes

Episode 241

Overview

This week we take a deep dive into the latest Linux malware, GoblinRAT, to look at how malware is evolving to stay stealthy and evade detection, and how malware authors are learning from modern software development along the way.

GoblinRAT Linux Malware teardown

- Solar 4RAYS team (Cyber Threat Research Center at SOLAR, a Russian cybersecurity firm) describes a new piece of Linux malware which they name GoblinRAT (RAT = Remote Access Trojan) - first discovered in 2023 when they were contacted by an IT company which provides services to (presumably) Russian government agencies, after it noticed system logs being deleted off one of its servers and a utility being downloaded to steal account passwords from a domain controller

- They found this malware masquerading as a legitimate process; it takes quite careful steps to avoid detection - in fact most of the functionality within the malware is devoted to hiding its presence on the target system

- Doesn’t include automatic persistence but instead appears to be manually “installed” by the attackers with a unique name for each target, named after an existing legitimate process on the target system - even the names of its files and libraries were unique per-system to avoid detection

- e.g. in one case where zabbix was running, the malware named itself zabbix_agent and set up a new systemd service to launch itself at boot which looks identical to the real zabbix agent (except the real one is zabbix_agentd) - once running, it edits its own command-line arguments to insert the standard parameters expected by the real zabbix_agentd, so that in ps aux or similar output it appears basically identical to the real zabbix_agentd

- in another case it named itself rhsmd to mimic the Red Hat subscription manager service, again using systemd as the launcher, whilst for others it posed as memcached, using cron to launch

- C2 uses DDNS (Dynamic DNS) and legitimate hacked sites to hide in the noise of “normal” traffic - using DDNS the attackers would use specifically named C2 machines per target host - in one case where it was named chrony_debug to mimic the chronyd time synchronisation service, it would connect to a C2 machine named chronyd.tftpd.net - the attackers clearly went to a lot of work to hide this in plain sight

- Automatically deletes itself off the system if it does not get pinged by the C2 operator within a certain period of time - and when it deletes itself it shreds itself to reduce the chance of being detected later via disk forensics etc

- Has 2 versions - a “server” and a “client” - the server uses port-knocking: it watches incoming connection requests on a given network interface and only actually allows a connection if the expected sequence of port numbers was tried - this allows the controller of the malware to connect into it without the malware actively listening on a given port, and hence reduces the chance it is detected accidentally

- The client instead connects back to its specific C2 server

- Logs collected by the 4RAYS team appear to show the commands executed by the malware were quite manual looking - invoking bash and then later invoking commands like systemctl to stop and replace an existing service, where the time lag between commands is on the order of seconds to minutes - so these seem to have been manually typed commands rather than automatically driven by scripts

- The malware itself is implemented in Go and includes the ability to execute single commands as well as provide an interactive shell; it also supports listing / copying / moving files (including with compression), works as a SOCKS5 proxy so it can proxy traffic to/from other hosts that may be behind more restrictive firewalls etc, and, as detailed above, can mimic existing processes on the system to avoid detection

- To try and frustrate reverse engineering, Gobfuscate was used to obfuscate the code - an odd choice though, since this project was seemingly abandoned 3 years ago and nowadays garble seems to be the go-to tool for this (no pun intended) - but perhaps this is evidence of the timing of the campaign, since these samples all date back to 2020 when this project was more active…

- Encrypts its configuration using AES-GCM; the config contains details like the shell to invoke, the kill-switch delay and the secret value to use to disable it, an alternate process name to use, plus the TLS certificate and keys to use when communicating with the C2 server

- Then uses the yamux Go connection-multiplexing library to multiplex the single TLS connection to/from the C2 server

- Can then be instructed to perform the various actions like running commands / launching a shell / listing files in a directory / reading files etc as discussed before

- The other interesting part is the kill switch / self-destruct functionality - if a kill-switch delay is specified in the encrypted configuration, the malware will automatically delete itself by invoking dd to overwrite itself with data from /dev/urandom 8 times, then once more with zero bytes, and finally removing the file from disk

- Overall 4 organisations were found to have been hacked with this, and in each it was running with full admin rights - with some running for over 3 years - and various binaries show compilation dates and Go toolchain versions indicating this has been developed since at least 2020

- But unlike other malware that we have covered, it does not appear to be part of a more widespread campaign, since “other information security companies with global sensor networks” couldn’t find any similar samples in their own collections

- No clear evidence of origin - Solar 4RAYS is asking other cybersecurity companies to contribute evidence to help identify the attackers

- Interesting to see that the evolution of malware mirrors that of normal software development - no longer using C/C++ etc but more modern languages like Go, which provide exactly the sorts of functionality you want in your malware: systems-level programming with built-in concurrency and memory safety - also Go binaries are typically statically linked, so there is no need to worry about dependencies on the target system
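
The name mimicry described above suggests a cheap triage check. The sketch below is our own assumption rather than anything from the 4RAYS write-up, and the helper names (name_mismatch, scan_procs) are hypothetical: it compares the name of the binary actually executing, taken from the /proc/PID/exe link, with argv[0] from /proc/PID/cmdline. If, as in the zabbix case, a file named zabbix_agent rewrites its command line to read zabbix_agentd, the two disagree.

```sh
#!/bin/sh
# Triage heuristic (an assumption, not from the 4RAYS report): flag processes
# whose argv[0] names a different program than the binary actually running.

# name_mismatch EXE_PATH ARGV0 -> status 0 (suspicious) if the basenames differ
name_mismatch() {
  local exe_name arg_name
  exe_name=${1##*/}
  arg_name=${2##*/}
  # A binary deleted from disk shows up as "name (deleted)" and is flagged
  # too, which is itself worth a closer look.
  [ -n "$arg_name" ] && [ "$exe_name" != "$arg_name" ]
}

# scan_procs: print every readable process whose names disagree
# (run as root to see all processes)
scan_procs() {
  local dir exe argv0
  for dir in /proc/[0-9]*; do
    # Kernel threads have no exe link; other users' processes need root
    exe=$(readlink "$dir/exe" 2>/dev/null) || continue
    # cmdline is NUL-separated; its first field is argv[0]
    argv0=$( { tr '\0' '\n' < "$dir/cmdline"; } 2>/dev/null | head -n 1)
    if name_mismatch "$exe" "$argv0"; then
      printf 'PID %s: exe=%s argv0=%s\n' "${dir#/proc/}" "$exe" "$argv0"
    fi
  done
}

# Hypothetical example shaped like the zabbix case: a file named zabbix_agent
# whose command line has been rewritten to look like the real zabbix_agentd
if name_mismatch /usr/sbin/zabbix_agent /usr/sbin/zabbix_agentd; then
  echo "suspicious: running binary does not match argv[0]"
fi
```

Expect plenty of false positives: login shells (-bash), interpreters running scripts, symlinked tools and daemons such as sshd that rewrite their process titles will all show up, so treat hits as leads rather than verdicts. Run scan_procs as root to see every process; checking each hit with dpkg -S on its exe path, to see whether any installed package owns it, is a natural next step.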

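The port-knocking scheme used by the server variant is easy to sketch. This is a hypothetical illustration, not GoblinRAT's code: the source does not say which ports it uses or how it observes the attempts, so here connection attempts are simply fed in as source/port pairs, the ports 7000 8000 9000 are made up, and each source's recent ports are kept in its own state file. A source is only let in once its last few attempts match the sequence in order, so nothing needs to actively listen on those ports.

```sh
#!/bin/sh
# Hypothetical port-knocking check (not GoblinRAT's code): a source is only
# let in once its most recent connection attempts match a secret sequence.
KNOCK_SEQUENCE="7000 8000 9000"                 # made-up secret sequence
KNOCK_STATE_DIR=${KNOCK_STATE_DIR:-$(mktemp -d)} # one state file per source

# last_n N WORD... -> print the last N words (or all of them if fewer)
last_n() {
  local n=$1
  shift
  if [ "$#" -gt "$n" ]; then
    shift $(( $# - n ))
  fi
  echo "$*"
}

# record_attempt SRC PORT -> log an attempt; print OPEN once SRC completes
# the sequence (its history is then cleared so the knock must be replayed)
record_attempt() {
  local state="$KNOCK_STATE_DIR/$1" n history
  n=$(set -- $KNOCK_SEQUENCE; echo "$#")
  # Unquoted $(cat ...) on purpose: one word per previously seen port
  history=$(last_n "$n" $(cat "$state" 2>/dev/null) "$2")
  if [ "$history" = "$KNOCK_SEQUENCE" ]; then
    rm -f "$state"
    echo OPEN
  else
    echo "$history" > "$state"
  fi
}

# A stray probe followed by the correct knock from one (documentation) address
for port in 22 7000 8000 9000; do
  record_attempt 192.0.2.10 "$port"
done
```

Real implementations such as knockd also enforce a timeout between knocks and then open the real port in the firewall; both are omitted here.
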
Get in contact

Episode 240

Overview

For the third and final part in our series for Cybersecurity Awareness Month, Alex is again joined by Luci as well as Diogo Sousa to discuss future trends in cybersecurity and the likely threats of the future.

Get in contact

Episode 239

Overview

In the second part of our series for Cybersecurity Awareness Month, Luci is back with Alex, along with Eduardo Barretto to discuss our top cybersecurity best practices.

Get in contact

Episode 238

Overview

For the first in a 3-part series for Cybersecurity Awareness Month, Luci Stanescu joins Alex to discuss the recent CUPS vulnerabilities as well as the evolution of cybersecurity since the origin of the internet.

Get in contact

Episode 237

Overview

John and Maxime have been talking about Ubuntu’s AppArmor user namespace restrictions at the Linux Security Summit in Europe this past week, plus we cover some more details from the official announcement of permission prompting in Ubuntu 24.10, a new release of Intel TDX for Ubuntu 24.04 LTS and more.

This week in Ubuntu Security Updates (01:11)

613 unique CVEs addressed in the past fortnight

[USN-6989-1] OpenStack vulnerability

- 1 CVE addressed in Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6990-1] znc vulnerability

- 1 CVE addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6992-1] Firefox vulnerabilities

- 8 CVEs addressed in Focal (20.04 LTS)

[USN-6993-1] Vim vulnerabilities

- 2 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6991-1] AIOHTTP vulnerability

- 1 CVE addressed in Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6995-1] Thunderbird vulnerabilities

- 10 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

[USN-6996-1] WebKitGTK vulnerabilities

- 6 CVEs addressed in Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6841-2] PHP vulnerability

- 1 CVE addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM)

[USN-6997-1, USN-6997-2] LibTIFF vulnerability

- 1 CVE addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6994-1] Netty vulnerabilities

- 2 CVEs addressed in Jammy (22.04 LTS)

- HTTP/2 DoS, seen exploited in the wild and listed in the CISA KEV

[USN-6998-1] Unbound vulnerabilities

- 2 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6999-1] Linux kernel vulnerabilities

- 220 CVEs addressed in Noble (24.04 LTS)

- Full CVE list elided - see USN for details

[USN-7003-1, USN-7003-2, USN-7003-3] Linux kernel vulnerabilities

- 85 CVEs addressed in Bionic ESM (18.04 ESM), Focal (20.04 LTS)

- Full CVE list elided - see USN for details

[USN-7004-1] Linux kernel vulnerabilities

- 221 CVEs addressed in Noble (24.04 LTS)

- Full CVE list elided - see USN for details

[USN-7005-1, USN-7005-2] Linux kernel vulnerabilities

- 219 CVEs addressed in Jammy (22.04 LTS), Noble (24.04 LTS)

- Full CVE list elided - see USN for details

[USN-7006-1] Linux kernel vulnerabilities

- 94 CVEs addressed in Focal (20.04 LTS)

- Full CVE list elided - see USN for details

[USN-7007-1] Linux kernel vulnerabilities

- 219 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

- Full CVE list elided - see USN for details

[USN-7008-1] Linux kernel vulnerabilities

- 222 CVEs addressed in Jammy (22.04 LTS)

- Full CVE list elided - see USN for details

[USN-7009-1] Linux kernel vulnerabilities

- 219 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

- Full CVE list elided - see USN for details

[USN-7019-1] Linux kernel vulnerabilities

- 429 CVEs addressed in Jammy (22.04 LTS)

- Full CVE list elided - see USN for details

[USN-7002-1] Setuptools vulnerability

- 1 CVE addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7000-1, USN-7000-2] Expat vulnerabilities

- 3 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7001-1, USN-7001-2] xmltok library vulnerabilities

- 2 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6560-3] OpenSSH vulnerability

- 1 CVE addressed in Xenial ESM (16.04 ESM)

[USN-7011-1, USN-7011-2] ClamAV vulnerabilities

- 2 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7012-1] curl vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7013-1] Dovecot vulnerabilities

- 2 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

[USN-7014-1] nginx vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7015-1] Python vulnerabilities

- 5 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7010-1] DCMTK vulnerabilities

- 9 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7016-1] FRR vulnerability

- 1 CVE addressed in Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-7017-1] Quagga vulnerability

- 1 CVE addressed in Focal (20.04 LTS)

[USN-7018-1] OpenSSL vulnerabilities

- 6 CVEs addressed in Trusty ESM (14.04 ESM)

Goings on in Ubuntu Security Community

Linux Security Summit Europe 2024 (03:44)

- https://events.linuxfoundation.org/linux-security-summit-europe/program/schedule/

- Sep 16-17 - Vienna, Austria

- John Johansen and Maxime Bélair from AppArmor team presented “Restricting Unprivileged User Namespaces in Ubuntu”

- Other talks

- Deep-dive into xz-utils supply chain attack

- Internals of the SLUB memory allocator for exploit developers

- Landlock update - including details of new IOCTL restrictions etc

- systemd and TPM2 update

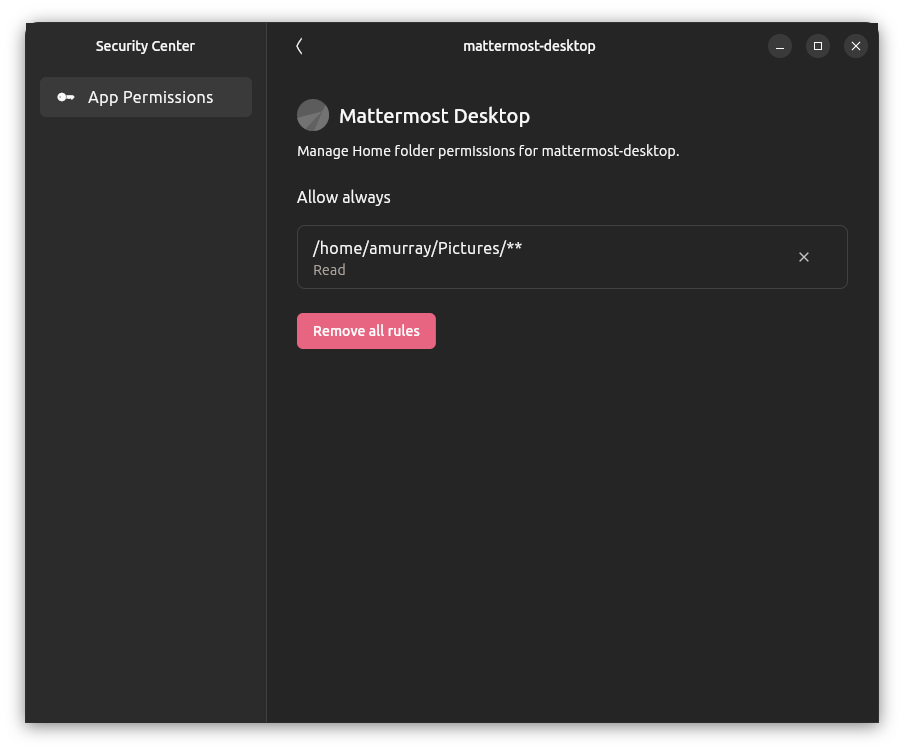

Official announcement of Permissions Prompting in Ubuntu 24.10 (09:00)

- https://discourse.ubuntu.com/t/ubuntu-desktop-s-24-10-dev-cycle-part-5-introducing-permissions-prompting/47963

- Ubuntu Security Center with snapd-based AppArmor home file access prompting preview in episode 236

- Even works for command-line applications etc - not just graphical

- Covers future developments as well:

- Better default response suggestions based on user feedback.

- Shell integration of the prompting pop-ups (eg full screen takeovers)

- Improved rule management summaries and better messaging of overlapping or redundant prompts.

- Expansion of the prompting system to cover additional snap interfaces such as camera and microphone access.

- Smarter client side analysis of prompts, recommending additional options if multiple similar prompts are detected.

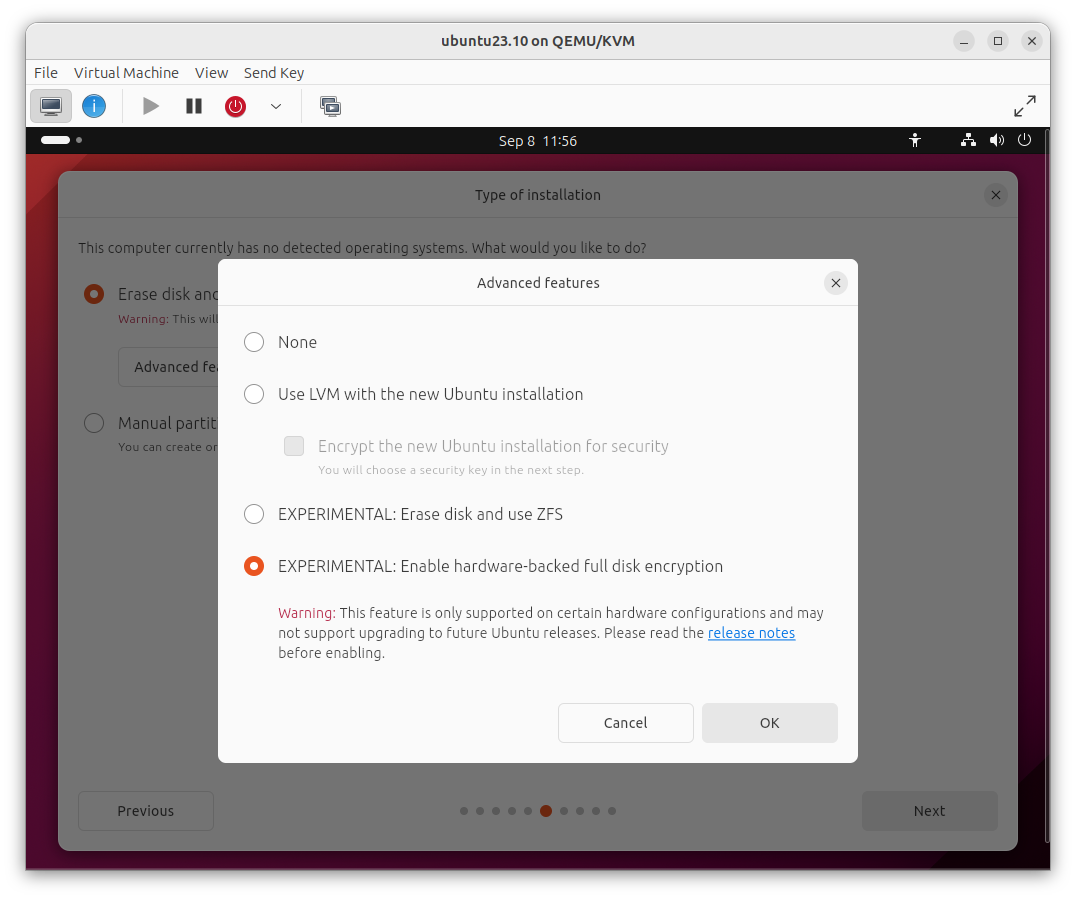

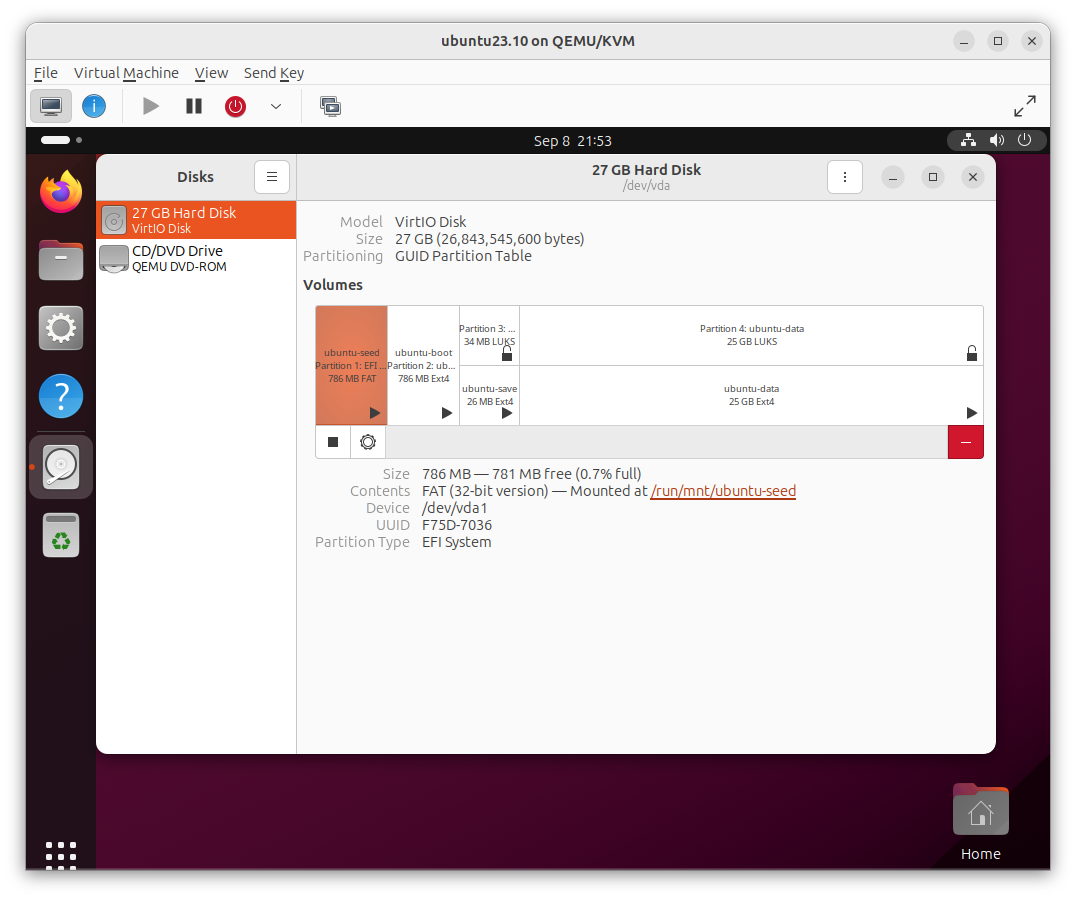

Version 2.1 of Intel® TDX on Ubuntu 24.04 LTS Released (11:46)

- https://discourse.ubuntu.com/t/version-2-1-of-intel-tdx-on-ubuntu-24-04-lts-released/47918/1

- Confidential computing - using TDX to run VMs in confidential mode - runs workloads (VMs) in hardware-backed isolated execution environments (Trust Domains). VM memory isolation via encryption in hardware so can’t be accessed by the hypervisor, remote attestation etc (Confidential Computing with Ijlal Loutfi and Karen Horovitz from Episode 230)

- https://discourse.ubuntu.com/t/intel-tdx-1-0-technology-preview-available-on-ubuntu-23-10/40698

- Scripts to set up the required elements to use TDX on an Ubuntu 24.04 host and then set up guest VMs to run in confidential mode

- Install server image, run scripts, enable TDX in BIOS, create VM images etc

- Can also configure remote attestation of the VM

- See full changes at https://github.com/canonical/tdx/releases/tag/2.1

Ubuntu 22.04.5 LTS released (13:45)

- https://discourse.ubuntu.com/t/jammy-jellyfish-point-release-changes/29835/8

- Only covers changes in main and restricted, doesn’t list security updates either

- https://discourse.ubuntu.com/t/jammy-jellyfish-release-notes/24668

AppArmor security update for CVE-2016-1585 published (14:23)

- Upcoming AppArmor Security update for CVE-2016-1585 from Episode 226

- https://discourse.ubuntu.com/t/upcoming-apparmor-security-update-for-cve-2016-1585/44268/3

- Now published to -updates pocket for 20.04 LTS and 22.04 LTS

- Will be published to -security pocket next week

Get in contact

Episode 236

Overview

The long awaited preview of snapd-based AppArmor file prompting is finally seeing the light of day, plus we cover the recent 24.04.1 LTS release and the podcast officially moves to a fortnightly cycle.

This week in Ubuntu Security Updates

45 unique CVEs addressed

[USN-6972-4] Linux kernel (Oracle) vulnerabilities

- 18 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM)

[USN-6982-1] Dovecot vulnerabilities

- 2 CVEs addressed in Noble (24.04 LTS)

[USN-6983-1] FFmpeg vulnerability

- 1 CVE addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6984-1] WebOb vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6973-4] Linux kernel (Raspberry Pi) vulnerabilities

- 9 CVEs addressed in Bionic ESM (18.04 ESM)

[USN-6981-2] Drupal vulnerabilities

- 3 CVEs addressed in Trusty ESM (14.04 ESM)

- 2 of these are in the CISA KEV - Discussion of CISA KEV from Episode 231

[USN-6986-1] OpenSSL vulnerability

- 1 CVE addressed in Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6987-1] Django vulnerabilities

- 2 CVEs addressed in Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6988-1] Twisted vulnerabilities

- 2 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6985-1] ImageMagick vulnerabilities

- 11 CVEs addressed in Trusty ESM (14.04 ESM)

Goings on in Ubuntu Security Community

Ubuntu 24.04.1 LTS released (02:55)

- On 29th August - https://lists.ubuntu.com/archives/ubuntu-announce/2024-August/000304.html

- https://discourse.ubuntu.com/t/ubuntu-24-04-lts-noble-numbat-release-notes/39890

- High-level features were discussed previously in Episode 227 - Ubuntu 24.04 LTS (Noble Numbat) released

- New security features / improvements:

- Unprivileged user namespace restrictions

- Binary hardening

- AppArmor 4

- Disabling of old TLS versions

- Upstream Kernel Security Features

- Intel shadow stack support

- Secure virtualisation with AMD SEV-SNP and Intel TDX

- Strict compile-time bounds checking

- Upgrades from 22.04 were initially offered, but this has been pulled just recently due to reports of a critical bug in the ubuntu-release-upgrader package and its interaction with the apt solver - essentially resulting in packages like linux-headers being left in a broken state, since it would remove some packages that were seen as obsolete but which were still required by other packages depending on them

- likely will not be fixed until early next week

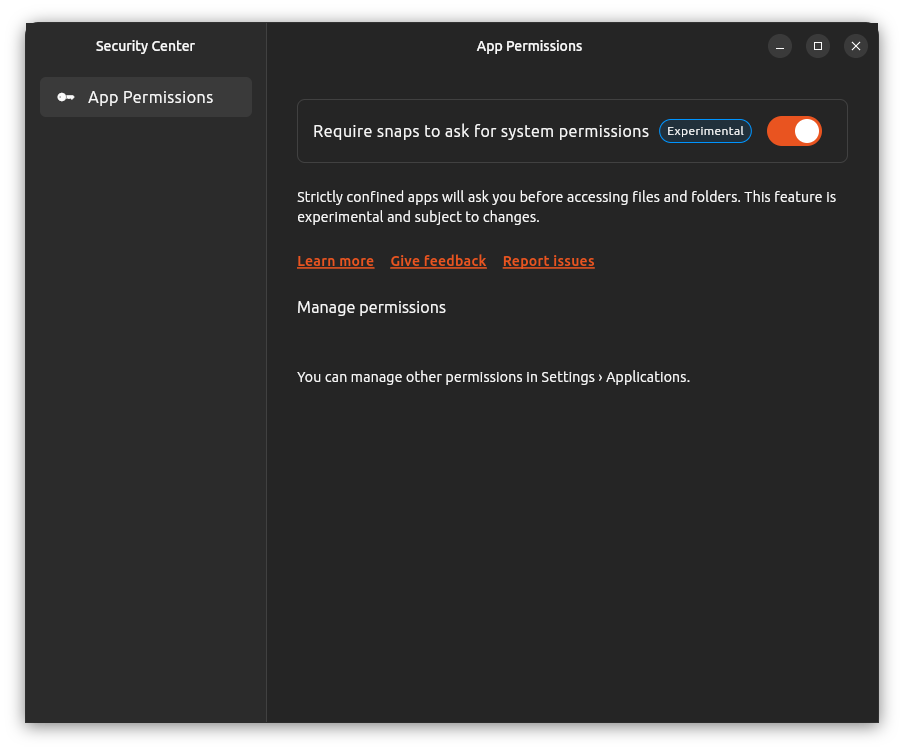

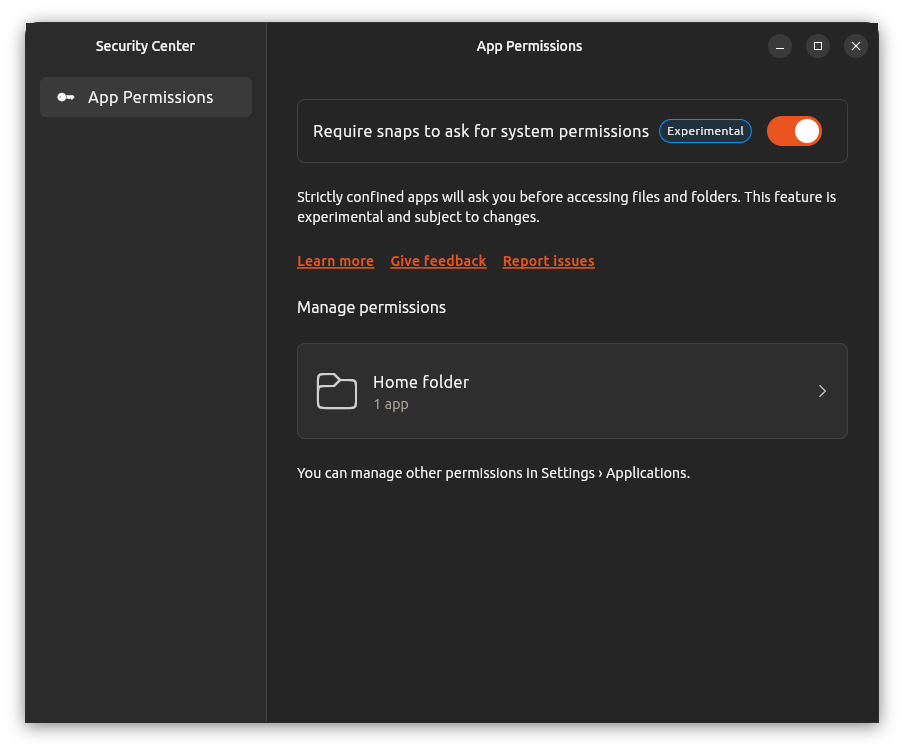

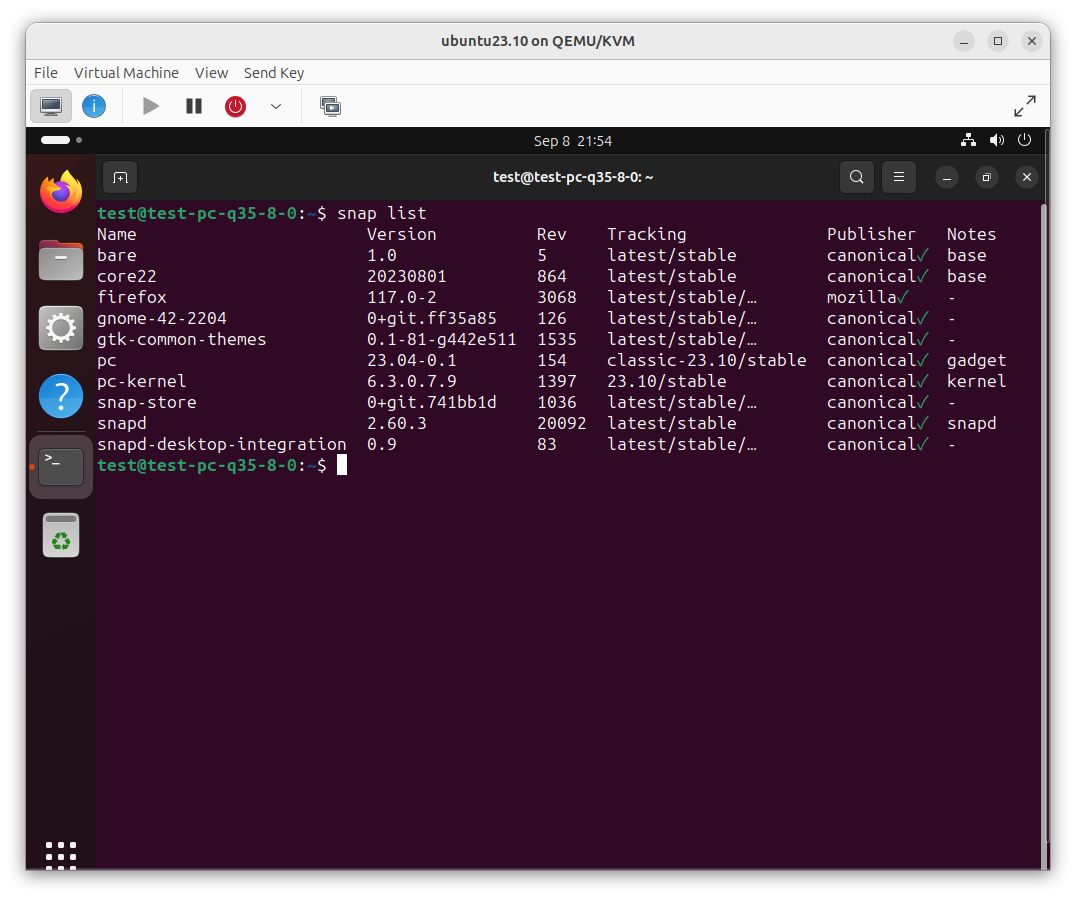

Ubuntu Security Center with snapd-based AppArmor home file access prompting preview (05:45)

- https://news.itsfoss.com/ubuntu-security-center-near-stable/

- Details the new Desktop Security Center application

- Written by the Ubuntu Desktop team - a new application built using Flutter + Dart etc and published as a snap

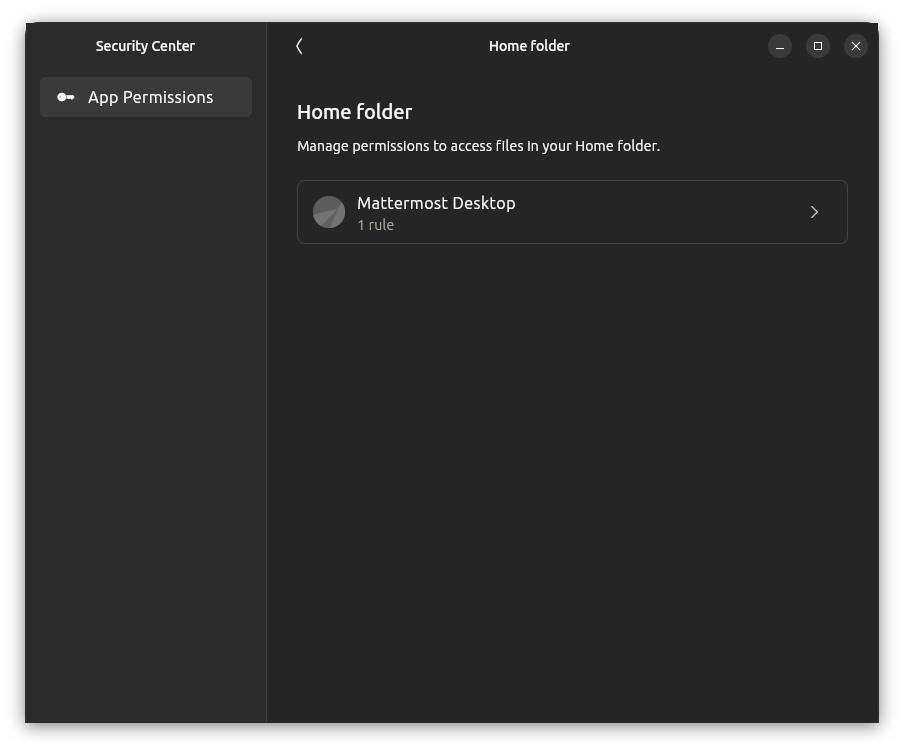

- Eventually this will allow managing various security-related things like full-disk encryption, enabling/using Ubuntu Pro, firewall control and finally snap permission prompting

- this last feature is the only one currently supported - has a single toggle which is to enable “snaps to ask for system permissions” - aka. snapd-based AppArmor prompting

- and then, once this is enabled, allows the specific permissions to be further fine-tuned

- What is AppArmor?

- AppArmor policies - and MAC systems in general - are static: the policy is defined by the sysadmin etc

- Not well suited for dynamic applications that are controlled by a user - like desktop / CLI etc - you can’t know in advance every possible file a user may want to open in, say, Firefox, so you have to grant access to all files in the home directory just in case

- Ideally the system would only allow access to files that the user explicitly chooses - there are a number of ways this can be done, XDG Portals being one such way - using the Powerbox concept pioneered in tools from the object-capability based security community like CapDesk/Polaris and Plash (principle of least-authority shell) - access is mediated by a privileged component that acts with the user’s whole authority to then delegate some of that authority to the application - seen, say, in the file-chooser dialog with portals - this runs outside of the scope of the application itself and so has full, unrestricted access to the system to allow a file to be chosen - the application is then just given a file descriptor to the file to grant it the access (or similar)

- This only works in the case of applications that open files interactively - can’t allow the user to explicitly grant access to the configuration file that gets loaded from a well-known path at startup in a server application etc

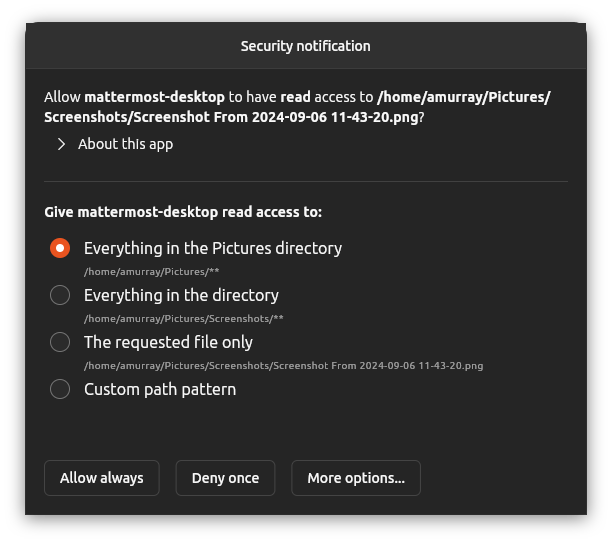

- One way to handle that case is to alert the user and explicitly prompt them for that access - and this is currently how this new prompting feature works

- When the feature is enabled, the usual broad-based access rules for the home interface in snapd get tagged with a prompt attribute - any access which would normally be allowed is then instead delegated to a trusted helper application, which displays a dialog asking the user to explicitly allow such access

- since this happens directly in the system-call path within the kernel, the application itself is unaware that this is happening - it is just suspended whilst waiting for the user’s response - and then, assuming they grant the access, it proceeds as normal (or if they deny it, the application gets a permission denied error)

- Completely transparent to the application and supports any kind of file-access regardless of which API might be used (unlike portals which only support the regular file-chooser scenario)

- Allows tighter control of what files a snap is granted access to - and can be managed by the user in the Security Center later to revoke any such permission that they granted

- Has been in development for a long time, and is certainly not a new concept - seccomp has supported this via the seccomp_unotify interface, which allows seccomp decisions to be delegated to userspace in a very similar manner - the basic mechanism arrived in Linux 5.0, with the 5.5 kernel (released in January 2020) adding the ability to let the original system call continue - even before that, prototype LSMs existed which implemented this kind of functionality (https://sourceforge.net/projects/pulse-lsm/ / https://crpit.scem.westernsydney.edu.au/confpapers/CRPITV81Murray.pdf)

- Can test this now on an up-to-date 24.04 or 24.10 install

- Need to use snapd from the latest/edge channel and then install both the desktop-security-center snap as well as the prompting-client snap

- Launch Security Center and toggle the option

- Note this is experimental but has undergone a fair amount of internal testing

- Very exciting to see this finally available in this pre-release stage - has been talked about since at least 2018

- Give it a spin and provide feedback - I would suggest using the link in the Security Center application itself for this, but it is not working currently - instead report via a GitHub issue on the desktop-security-center project

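As a way to picture the prompting flow described above, here is a toy model in shell. It is purely illustrative and makes no claim about how snapd stores or evaluates rules: remembered user decisions are glob/outcome pairs checked in order (first match wins, an assumption), and any path without a matching rule falls through to prompt, which is the point where the real system suspends the access and asks the user through the trusted helper. The helper names add_rule and decide are hypothetical.

```sh
#!/bin/sh
# Toy model of prompt-backed access decisions: NOT snapd's implementation.
# Remembered user decisions are checked first; anything unmatched would be
# handed to the trusted prompting helper.
RULES=""   # newline-separated "GLOB OUTCOME" pairs; globs must not contain spaces

# add_rule GLOB allow|deny -> remember a decision the user made earlier
add_rule() {
  RULES="${RULES}$1 $2
"
}

# decide PATH -> print allow, deny or prompt (first matching rule wins)
decide() {
  local path=$1 pattern outcome
  while read -r pattern outcome; do
    [ -n "$pattern" ] || continue
    # Unquoted $pattern is a glob here; in case patterns * also matches /
    case $path in
      $pattern) echo "$outcome"; return 0 ;;
    esac
  done <<EOF
$RULES
EOF
  echo prompt   # no remembered decision: the real system would ask the user
}

add_rule '/home/alice/Documents/*' allow
add_rule '/home/alice/.ssh/*' deny
decide /home/alice/Documents/report.odt   # prints allow
decide /home/alice/.ssh/id_ed25519        # prints deny
decide /home/alice/Pictures/cat.png       # prints prompt
```

The key property the model tries to capture is that the application never sees any of this: from its point of view the open call simply succeeds or fails.
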
Get in contact

Episode 235

Overview

A recent Microsoft Windows update breaks Linux dual-boot - or does it? This week we look into reports of the recent Windows patch-Tuesday update breaking dual-boot, including a deep-dive into the technical details of Secure Boot, SBAT, grub, shim and more, plus we look at a vulnerability in GNOME Shell and the handling of captive portals as well.

This week in Ubuntu Security Updates

135 unique CVEs addressed

[USN-6960-1] RMagick vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

[USN-6951-2] Linux kernel (Azure) vulnerabilities

- 83 CVEs addressed in Focal (20.04 LTS)

- CVE-2022-48674

- CVE-2024-39471

- CVE-2024-39292

- CVE-2024-36270

- CVE-2024-36904

- CVE-2024-38618

- CVE-2024-36014

- CVE-2024-36941

- CVE-2024-38637

- CVE-2024-38613

- CVE-2024-36286

- CVE-2024-36902

- CVE-2024-38599

- CVE-2024-39301

- CVE-2024-39475

- CVE-2024-36954

- CVE-2024-33621

- CVE-2024-38552

- CVE-2024-36950

- CVE-2024-38582

- CVE-2024-36015

- CVE-2023-52434

- CVE-2024-38659

- CVE-2024-36940

- CVE-2024-38607

- CVE-2024-39480

- CVE-2024-38583

- CVE-2023-52882

- CVE-2024-39467

- CVE-2024-39489

- CVE-2024-38601

- CVE-2024-27019

- CVE-2023-52752

- CVE-2024-36960

- CVE-2024-38549

- CVE-2024-38567

- CVE-2024-38587

- CVE-2024-38635

- CVE-2024-38598

- CVE-2024-38612

- CVE-2024-38579

- CVE-2024-27401

- CVE-2024-36946

- CVE-2024-36017

- CVE-2022-48772

- CVE-2024-36905

- CVE-2024-35947

- CVE-2024-38381

- CVE-2024-38565

- CVE-2024-38589

- CVE-2024-36939

- CVE-2024-38661

- CVE-2024-39488

- CVE-2024-36883

- CVE-2024-38621

- CVE-2024-37353

- CVE-2024-38780

- CVE-2024-36964

- CVE-2024-38627

- CVE-2024-36971

- CVE-2024-38615

- CVE-2024-38559

- CVE-2024-31076

- CVE-2024-26886

- CVE-2024-39493

- CVE-2024-27398

- CVE-2024-36886

- CVE-2024-38633

- CVE-2024-36959

- CVE-2024-38634

- CVE-2024-38560

- CVE-2024-38558

- CVE-2023-52585

- CVE-2024-37356

- CVE-2024-35976

- CVE-2024-36919

- CVE-2024-36933

- CVE-2024-38596

- CVE-2024-39276

- CVE-2024-27399

- CVE-2024-38600

- CVE-2024-38578

- CVE-2024-36934

[USN-6961-1] BusyBox vulnerabilities

- 4 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6962-1] LibreOffice vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6963-1] GNOME Shell vulnerability (01:03)

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Captive portal detection would automatically spawn an embedded webkit browser to allow the user to log in etc

- But the page the user gets directed to is controlled by the attacker and can contain arbitrary javascript etc

- The upstream bug report claimed an attacker could then get a reverse shell etc - it is not clear this is the case, since they would still be constrained by the webkitgtk browser and so would also need a sandbox escape etc.

- This update therefore includes a change to both not automatically open the captive portal page (instead it will show a notification and the user needs to click that) BUT also to disable the use of the webkitgtk-based embedded browser and instead use the user’s regular browser

[USN-6909-3] Bind vulnerabilities

- 2 CVEs addressed in Xenial ESM (16.04 ESM)

[USN-6964-1] ORC vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6837-2] Rack vulnerabilities

- 3 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS)

[USN-6966-1] Firefox vulnerabilities

- 13 CVEs addressed in Focal (20.04 LTS)

[USN-6966-2] Firefox regressions

- 13 CVEs addressed in Focal (20.04 LTS)

[USN-6951-3] Linux kernel (Azure) vulnerabilities

- 83 CVEs addressed in Bionic ESM (18.04 ESM)

- CVE-2022-48674

- CVE-2024-39471

- CVE-2024-39292

- CVE-2024-36270

- CVE-2024-36904

- CVE-2024-38618

- CVE-2024-36014

- CVE-2024-36941

- CVE-2024-38637

- CVE-2024-38613

- CVE-2024-36286

- CVE-2024-36902

- CVE-2024-38599

- CVE-2024-39301

- CVE-2024-39475

- CVE-2024-36954

- CVE-2024-33621

- CVE-2024-38552

- CVE-2024-36950

- CVE-2024-38582

- CVE-2024-36015

- CVE-2023-52434

- CVE-2024-38659

- CVE-2024-36940

- CVE-2024-38607

- CVE-2024-39480

- CVE-2024-38583

- CVE-2023-52882

- CVE-2024-39467

- CVE-2024-39489

- CVE-2024-38601

- CVE-2024-27019

- CVE-2023-52752

- CVE-2024-36960

- CVE-2024-38549

- CVE-2024-38567

- CVE-2024-38587

- CVE-2024-38635

- CVE-2024-38598

- CVE-2024-38612

- CVE-2024-38579

- CVE-2024-27401

- CVE-2024-36946

- CVE-2024-36017

- CVE-2022-48772

- CVE-2024-36905

- CVE-2024-35947

- CVE-2024-38381

- CVE-2024-38565

- CVE-2024-38589

- CVE-2024-36939

- CVE-2024-38661

- CVE-2024-39488

- CVE-2024-36883

- CVE-2024-38621

- CVE-2024-37353

- CVE-2024-38780

- CVE-2024-36964

- CVE-2024-38627

- CVE-2024-36971

- CVE-2024-38615

- CVE-2024-38559

- CVE-2024-31076

- CVE-2024-26886

- CVE-2024-39493

- CVE-2024-27398

- CVE-2024-36886

- CVE-2024-38633

- CVE-2024-36959

- CVE-2024-38634

- CVE-2024-38560

- CVE-2024-38558

- CVE-2023-52585

- CVE-2024-37356

- CVE-2024-35976

- CVE-2024-36919

- CVE-2024-36933

- CVE-2024-38596

- CVE-2024-39276

- CVE-2024-27399

- CVE-2024-38600

- CVE-2024-38578

- CVE-2024-36934

[USN-6968-1] PostgreSQL vulnerability

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6967-1] Intel Microcode vulnerabilities

- 5 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[LSN-0106-1] Linux kernel vulnerability

- 3 CVEs addressed in

[USN-6969-1] Cacti vulnerabilities

- 10 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

[USN-6970-1] exfatprogs vulnerability

- 1 CVE addressed in Jammy (22.04 LTS)

[USN-6944-2] curl vulnerability

- 1 CVE addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM)

[USN-6965-1] Vim vulnerabilities

- 5 CVEs addressed in Trusty ESM (14.04 ESM)

Goings on in Ubuntu Security Community

Reports of dual-boot Linux/Windows machines failing to boot (04:30)

- https://arstechnica.com/security/2024/08/a-patch-microsoft-spent-2-years-preparing-is-making-a-mess-for-some-linux-users/

- https://msrc.microsoft.com/update-guide/en-US/advisory/CVE-2022-2601

- https://discourse.ubuntu.com/t/sbat-self-check-failed-mitigating-the-impact-of-shim-15-7-revocation-on-the-ubuntu-boot-process-for-devices-running-windows/47378

- Microsoft released an update for Windows on 13th August 2024 - revoking old versions of grub that were susceptible to CVE-2022-2601

- How do you revoke grub?

- Secure Boot relies on each component in the boot chain verifying that the next component is signed with a valid signature before it is then loaded

- UEFI BIOS validates shim

- shim validates grub

- grub validates kernel

- kernel validates kernel modules etc

- UEFI specification has effectively a CRL - list of hashes of binaries which shouldn’t be trusted

- BUT there is only limited space in the UEFI storage - after the original BootHole vulnerabilities revoked a huge number of grub binaries from many different distros, some devices failed to boot as the NVRAM was too full

- Microsoft and Red Hat and other maintainers of shim decided on a new scheme, called SBAT - Secure Boot Advanced Targeting

- maintains a generation number for each component in the boot chain

- when, say, shim or grub gets updated to fix a bunch more security vulnerabilities, upstream bumps the generation number

- shim/grub then embeds the generation number within itself

- Signed UEFI variable then lists which generation numbers are acceptable

- shim checks the generation number of a binary (grub/fwupd etc) against this list and if it is too old refuses to load it


- In Ubuntu this was patched back in Jan 2023 and documented on the Ubuntu Discourse - in this case we updated shim to a newer version which itself revoked older grub binaries (grub,1)

- Now Microsoft’s update revokes grub,2, ie sets the minimum generation number for grub to 3

- You can inspect the SBAT policy either by directly reading the associated EFI variable or by using mokutil --list-sbat-revocations

cat /sys/firmware/efi/efivars/SbatLevelRT-605dab50-e046-4300-abb6-3dd810dd8b23

mokutil --list-sbat-revocations
sbat,1,2023012900
shim,2
grub,3
grub.debian,4
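Reading the variable with cat as above also prints a few bytes of binary noise, because Linux’s efivarfs prefixes each variable’s contents with a 4-byte attributes field. A minimal sketch that strips that prefix, assuming the standard efivarfs layout; read_efivar_data is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Print the payload of an efivarfs variable file without its 4-byte
# attributes prefix (Linux efivarfs layout).
# read_efivar_data is a hypothetical helper used for illustration.
read_efivar_data() {
  local var=$1
  [ -r "$var" ] || { echo "cannot read $var" >&2; return 1; }
  # tail -c +5 starts output at byte 5, skipping bytes 1-4 (the attributes)
  tail -c +5 -- "$var"
}

# Example on an affected machine:
# read_efivar_data /sys/firmware/efi/efivars/SbatLevelRT-605dab50-e046-4300-abb6-3dd810dd8b23
```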

objdump -j .sbat -s /boot/efi/EFI/ubuntu/grubx64.efi | xxd -r
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
grub,4,Free Software Foundation,grub,2.12,https://www.gnu.org/software/grub/
grub.ubuntu,2,Ubuntu,grub2,2.12-5ubuntu4,https://www.ubuntu.com/
grub.peimage,2,Canonical,grub2,2.12-5ubuntu4,https://salsa.debian.org/grub-team/grub/-/blob/master/debian/patches/secure-boot/efi-use-peimage-shim.patch
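Putting the policy and the grub .sbat data above side by side shows how the check works: for each component in a binary’s .sbat section, shim looks up the minimum generation in the policy and refuses the binary if its generation is lower. A simplified shell sketch of that comparison, assuming the CSV layouts shown above; sbat_check is a hypothetical name, it is not shim’s implementation, and it ignores the datestamp field in the policy’s first line:

```shell
#!/usr/bin/env bash
# Simplified illustration of an SBAT revocation check (not shim's real code).
#   $1: revocation policy, one "component,min_generation[,...]" entry per line
#   $2: a binary's .sbat data, one "component,generation,vendor,..." entry per line
# Prints every revoked component and returns 1 if the binary would be refused.
sbat_check() {
  local policy=$1 binary=$2 rc=0
  local comp gen rest min
  # "|| [ -n "$comp" ]" also handles a final line without a trailing newline
  while IFS=, read -r comp gen rest || [ -n "$comp" ]; do
    [ -z "$comp" ] && continue
    # minimum generation the policy demands for this component (empty if none)
    min=$(awk -F, -v c="$comp" '$1 == c { print $2; exit }' "$policy")
    if [ -n "$min" ] && [ "$gen" -lt "$min" ]; then
      echo "revoked: $comp generation $gen < $min"
      rc=1
    fi
  done < "$binary"
  return "$rc"
}
```

With the policy above, the noble grub (grub,4) passes, whereas a grub binary still carrying grub,2 would be reported as "revoked: grub generation 2 < 3". Components the policy doesn’t mention, such as grub.ubuntu here, are not restricted.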

rm -rf grub2-signed
mkdir grub2-signed
pushd grub2-signed >/dev/null || exit
for rel in focal jammy noble; do
  mkdir "$rel"
  pushd "$rel" >/dev/null || exit
  # fetch grub2-signed from the -security pocket, falling back to -release
  pull-lp-debs grub2-signed "$rel-security" >/dev/null 2>&1 || pull-lp-debs grub2-signed "$rel-release" >/dev/null 2>&1
  # unpack it, skip objdump's 4 header lines and turn the .sbat hex dump back into text
  dpkg-deb -x grub-efi-amd64-signed*.deb grub2-signed
  echo "$rel"
  echo -----
  find . -name grubx64.efi.signed -exec objdump -j .sbat -s {} \; | tail -n +5 | xxd -r
  popd >/dev/null || exit
done
popd >/dev/null || exit

focal
-----
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
grub,4,Free Software Foundation,grub,2.06,https://www.gnu.org/software/grub/
grub.ubuntu,1,Ubuntu,grub2,2.06-2ubuntu14.4,https://www.ubuntu.com/

jammy
-----
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
grub,4,Free Software Foundation,grub,2.06,https://www.gnu.org/software/grub/
grub.ubuntu,1,Ubuntu,grub2,2.06-2ubuntu14.4,https://www.ubuntu.com/

noble
-----
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
grub,4,Free Software Foundation,grub,2.12,https://www.gnu.org/software/grub/
grub.ubuntu,2,Ubuntu,grub2,2.12-1ubuntu7,https://www.ubuntu.com/
grub.peimage,2,Canonical,grub2,2.12-1ubuntu7,https://salsa.debian.org/grub-team/grub/-/blob/master/debian/patches/secure-boot/efi-use-peimage-shim.patch

- So if all the current LTS releases have a grub with a generation number higher than this, why are so many machines failing to boot?

- It is not just grub that is the issue - shim itself also got revoked in the same update https://msrc.microsoft.com/update-guide/en-US/advisory/CVE-2023-40547 - so shim 15.8 (ie. 4th SBAT generation of shim) is now required

- Unfortunately, the related updates for this shim in Ubuntu are still in the process of being released - https://bugs.launchpad.net/ubuntu/+source/shim/+bug/2051151

rm -rf shim-signed
mkdir shim-signed
pushd shim-signed >/dev/null || exit
for rel in focal jammy noble; do
  mkdir "$rel"
  pushd "$rel" >/dev/null || exit
  # fetch shim-signed from the -security pocket, falling back to -release
  pull-lp-debs shim-signed "$rel-security" >/dev/null 2>&1 || pull-lp-debs shim-signed "$rel-release" >/dev/null 2>&1
  # unpack it, skip objdump's 4 header lines and turn the .sbat hex dump back into text
  dpkg-deb -x shim-signed*.deb shim-signed
  echo "$rel"
  echo -----
  find . -name shimx64.efi.signed.latest -exec objdump -j .sbat -s {} \; | tail -n +5 | xxd -r
  popd >/dev/null || exit
done
popd >/dev/null || exit

focal
-----
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
shim,3,UEFI shim,shim,1,https://github.com/rhboot/shim
shim.ubuntu,1,Ubuntu,shim,15.7-0ubuntu1,https://www.ubuntu.com/

jammy
-----
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
shim,3,UEFI shim,shim,1,https://github.com/rhboot/shim
shim.ubuntu,1,Ubuntu,shim,15.7-0ubuntu1,https://www.ubuntu.com/

noble
-----
sbat,1,SBAT Version,sbat,1,https://github.com/rhboot/shim/blob/main/SBAT.md
shim,4,UEFI shim,shim,1,https://github.com/rhboot/shim
shim.ubuntu,1,Ubuntu,shim,15.8-0ubuntu1,https://www.ubuntu.com/
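Since shim 15.8 (SBAT generation 4 for the shim component) is now required, the .sbat text produced by the loop above can be piped into a small check. This is a sketch; shim_generation_ok is a hypothetical helper, and its default minimum of 4 is taken from the Microsoft revocation described above:

```shell
#!/usr/bin/env bash
# Read a shim .sbat CSV on stdin and succeed only if the generation of the
# "shim" component is at least the required minimum (default 4, ie shim 15.8).
# shim_generation_ok is a hypothetical helper used for illustration.
shim_generation_ok() {
  local required=${1:-4}
  local gen
  # field 2 of the line whose component is exactly "shim" (not shim.ubuntu)
  gen=$(awk -F, '$1 == "shim" { print $2; exit }')
  if [ -z "$gen" ]; then
    echo "no shim entry found" >&2
    return 2
  fi
  echo "shim generation $gen (required >= $required)"
  [ "$gen" -ge "$required" ]
}
```

Appending | shim_generation_ok to the find ... | xxd -r pipeline in the loop would then fail for the focal and jammy shims shown above (shim,3) and succeed for noble (shim,4).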

- only noble has a new-enough shim in the security/release pocket - both focal and jammy have the older one - but the new 4th generation shim is currently undergoing testing in the -proposed pocket and will be released next week

- until then, if affected, you need to disable Secure Boot in the BIOS and can then either wait until the new shim is released OR just reboot twice in this mode, and shim will automatically reset the SBAT policy to the previous version, allowing the older shim to still be used

- then you can re-enable Secure Boot in the BIOS

- once the new shim is released it will reinstall the new SBAT policy to revoke its older version

- One other thing: this also means the old ISOs won’t boot either

- 24.04.1 will be released on 29th August

- the upcoming 22.04.5 release will also have this new shim

- no further ISO spins are planned for 20.04 - so if you really want to install this release on new hardware, you would need to disable Secure Boot first, do the install, then install updates to get the new shim, and re-enable Secure Boot

Get in contact

Episode 234

Overview

This week we take a behind-the-scenes deep dive into how the team handled a recent report from Snyk’s Security Lab of a local privilege escalation vulnerability in wpa_supplicant, plus we cover security updates in Prometheus Alertmanager, OpenSSL, Exim, snapd, Gross, curl and more.

This week in Ubuntu Security Updates

185 unique CVEs addressed

[USN-6935-1] Prometheus Alertmanager vulnerability (01:08)

- 1 CVE addressed in Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS)

- Stored XSS via the Alertmanager UI - the alerts API allows specifying a URL which the user should be able to follow interactively from the UI - an attacker could instead POST arbitrary JavaScript in this field, which would then get included in the generated HTML and hence run in the browsers of users viewing the UI

- Fixed to validate that this field is actually a URL before including it in the generated UI page
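A rough shell illustration of the kind of validation described above: only a plain web URL is accepted as a link target, so a javascript: payload is rejected before it can reach the generated HTML. This is not Alertmanager’s Go code; we assume here that http and https are the only schemes worth allowing, and is_safe_link_url is a hypothetical name:

```shell
#!/usr/bin/env bash
# Accept a link target only if it is an http:// or https:// URL with something
# after the scheme; values such as "javascript:alert(1)" are rejected.
# is_safe_link_url is a hypothetical helper; the scheme match is case-insensitive.
is_safe_link_url() {
  local lower
  lower=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  case $lower in
    http://?*|https://?*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Validating the scheme closes off script URLs, but the value should still be HTML-escaped when it is written into the page, since a URL can contain characters such as quotes.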

[USN-6938-1] Linux kernel vulnerabilities (02:05)

- 31 CVEs addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM)

- CVE-2024-35978

- CVE-2024-35984

- CVE-2024-35997

- CVE-2024-26840

- CVE-2024-27020

- CVE-2023-52752

- CVE-2021-47194

- CVE-2021-46960

- CVE-2024-26884

- CVE-2024-36016

- CVE-2023-52436

- CVE-2024-36902

- CVE-2024-26886

- CVE-2023-52469

- CVE-2024-26923

- CVE-2023-52444

- CVE-2023-52620

- CVE-2021-46933

- CVE-2024-35982

- CVE-2023-52449

- CVE-2024-26934

- CVE-2024-26882

- CVE-2024-26857

- CVE-2021-46932

- CVE-2024-26901

- CVE-2024-25739

- CVE-2024-24859

- CVE-2024-24858

- CVE-2024-24857

- CVE-2023-46343

- CVE-2022-48619

- 4.4 - generic, AWS, KVM, Low Latency, Virtual

[USN-6922-2] Linux kernel vulnerabilities

- 4 CVEs addressed in Jammy (22.04 LTS)

- 6.5 lowlatency

[USN-6926-2] Linux kernel vulnerabilities

- 30 CVEs addressed in Trusty ESM (14.04 ESM), Bionic ESM (18.04 ESM)

- CVE-2023-52620

- CVE-2023-52444

- CVE-2024-26901

- CVE-2023-52449

- CVE-2024-27013

- CVE-2024-26934

- CVE-2024-35978

- CVE-2024-27020

- CVE-2023-52469

- CVE-2024-35982

- CVE-2024-35997

- CVE-2023-52443

- CVE-2024-36902

- CVE-2024-26857

- CVE-2024-36016

- CVE-2023-52436

- CVE-2023-52752

- CVE-2024-26886

- CVE-2024-35984

- CVE-2023-52435

- CVE-2024-26840

- CVE-2024-26923

- CVE-2024-26882

- CVE-2024-26884

- CVE-2024-25744

- CVE-2024-25739

- CVE-2024-24859

- CVE-2024-24858

- CVE-2024-24857

- CVE-2023-46343

- 4.15 Azure

[USN-6895-4] Linux kernel vulnerabilities

- 100 CVEs addressed in Jammy (22.04 LTS)

- CVE-2024-26802

- CVE-2024-26664

- CVE-2023-52880

- CVE-2024-26695

- CVE-2024-27416

- CVE-2024-26714

- CVE-2024-26603

- CVE-2024-26920

- CVE-2024-26736

- CVE-2024-26593

- CVE-2024-26922

- CVE-2024-26600

- CVE-2024-26702

- CVE-2024-26782

- CVE-2024-26685

- CVE-2024-26691

- CVE-2024-26734

- CVE-2024-26822

- CVE-2024-35833

- CVE-2024-26792

- CVE-2024-26674

- CVE-2024-26889

- CVE-2024-26712

- CVE-2024-26917

- CVE-2024-26919

- CVE-2023-52637

- CVE-2024-26700

- CVE-2024-26661

- CVE-2024-26926

- CVE-2023-52631

- CVE-2024-26679

- CVE-2024-26798

- CVE-2024-26667

- CVE-2024-26689

- CVE-2024-26681

- CVE-2024-26910

- CVE-2024-26828

- CVE-2024-26790

- CVE-2024-26606

- CVE-2024-26825

- CVE-2024-26677

- CVE-2024-26722

- CVE-2024-26923

- CVE-2024-26803

- CVE-2024-26898

- CVE-2023-52642

- CVE-2024-26660

- CVE-2024-26716

- CVE-2023-52645

- CVE-2024-26602

- CVE-2024-26711

- CVE-2024-26826

- CVE-2024-26601

- CVE-2024-26890

- CVE-2024-26698

- CVE-2024-26693

- CVE-2024-26665

- CVE-2024-26676

- CVE-2024-26824

- CVE-2024-26838

- CVE-2024-26720

- CVE-2024-26666

- CVE-2024-26718

- CVE-2024-26723

- CVE-2024-26675

- CVE-2024-26680

- CVE-2024-26642

- CVE-2024-26710

- CVE-2024-26696

- CVE-2024-26748

- CVE-2024-26717

- CVE-2024-26735

- CVE-2024-26916

- CVE-2024-26697

- CVE-2024-26829

- CVE-2024-26715

- CVE-2024-26694

- CVE-2024-26830

- CVE-2024-26726

- CVE-2024-26719

- CVE-2024-26820

- CVE-2024-26707

- CVE-2024-26818

- CVE-2024-26733

- CVE-2024-26688

- CVE-2023-52643

- CVE-2024-26703

- CVE-2024-26831

- CVE-2024-26789

- CVE-2024-26662

- CVE-2024-26663

- CVE-2024-26708

- CVE-2024-26659

- CVE-2024-26684

- CVE-2023-52638

- CVE-2024-24861

- CVE-2024-23307

- CVE-2024-1151

- CVE-2024-0841

- CVE-2023-6270

- 6.5 OEM

[USN-6937-1] OpenSSL vulnerabilities (03:04)

- 4 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Four low priority issues

- Possible UAF in the SSL_free_buffers API - requires an application to directly call this function - across the entire Ubuntu package ecosystem there don’t appear to be any packages that do this, so highly unlikely to be an issue in practice

- Similarly, in another rarely used function, SSL_select_next_proto - if called with an empty buffer list would read other private memory (ie an OOB read) and potentially then either crash or return private data - but again this is not expected to occur in practice

- CPU-based DoS when validating long / crafted DSA keys

- simply check if using too large a modulus and error in that case

- If the SSL_OP_NO_TICKET option had been set, could possibly get into a state where the session cache would not be flushed and so would grow unbounded - memory-based DoS

[USN-6913-2] phpCAS vulnerability (04:51)

- 1 CVE addressed in Xenial ESM (16.04 ESM)

- [USN-6913-1] phpCAS vulnerability from Episode 233

[USN-6936-1] Apache Commons Collections vulnerability (05:03)

- 1 CVE addressed in Trusty ESM (14.04 ESM)

- Unsafe deserialisation - could allow an attacker to overwrite an object with a version they control containing code to be executed - RCE

[USN-6939-1] Exim vulnerability (05:31)

- 1 CVE addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Mishandles multiline filename header and so a crafted value could bypass the MIME type extension blocking mechanism - allowing executables etc to be delivered to users

[USN-6933-1] ClickHouse vulnerabilities (06:00)

- 5 CVEs addressed in Focal (20.04 LTS)

- real-time analytics DBMS

- Mostly written in C++, so not surprisingly has various memory safety issues

- All in the LZ4 compression codec - uses an attacker-controlled 16-bit unsigned value as the offset to read from in the compressed data - this value is then also used when copying the data but there is no check on the upper bound, so could index outside of the data

- Also a heap buffer overflow during this same data copy, since it doesn’t verify the size of the destination either
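The missing checks can be expressed as simple arithmetic on the decoder state. A sketch, assuming an LZ4-style match where len bytes are copied from pos - offset to pos in an output buffer of out_size bytes; match_copy_in_bounds is a hypothetical helper, not ClickHouse’s code:

```shell
#!/usr/bin/env bash
# Bounds checks an LZ4-style decoder needs before copying a "match":
# copy $len bytes from (pos - offset) to pos in an output buffer of $out_size bytes.
# offset comes straight from the attacker-controlled compressed input.
# match_copy_in_bounds is a hypothetical helper used for illustration.
match_copy_in_bounds() {
  local pos=$1 offset=$2 len=$3 out_size=$4
  # offset is a 16-bit field and 0 is never a valid back-reference
  (( offset >= 1 && offset <= 65535 )) || return 1
  # the source must not start before the beginning of the decoded data
  (( offset <= pos )) || return 1
  # the destination must have room for the whole copy
  (( pos + len <= out_size )) || return 1
  return 0
}
```

The second check addresses the out-of-bounds read through the attacker-controlled offset, and the third the heap overflow on the destination.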

[USN-6940-1] snapd vulnerabilities (06:55)

- 3 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- 2 quite similar issues discovered by one of the engineers on the snapd team - Zeyad Gouda

- snaps are squashfs images - in general they are just mounted but certain files from the squashfs get extracted by snapd and placed on the regular file-system (ie. desktop files and icons for launchers etc) - as such, snapd would read the contents of these files and then write them out - if the file was actually a named pipe, snapd would block forever - DoS

- similarly, if the file was a symlink that pointed to an existing file on the file-system, when opening that file (which is a symlink) snapd would read the contents of the other file and write it out - recall these are desktop files etc so they get written to /usr/share/applications, which is world-readable - so if the symlink pointed to /etc/shadow then you would get a copy of this written out as world-readable - so an unprivileged user on the system could then possibly escalate their privileges

- 3rd issue was an AppArmor sandbox bypass

- home interface allows snaps to read/write to your home directory

- On Ubuntu, if the ~/bin directory exists in your home directory, it gets automatically added to your PATH

- AppArmor policy for snapd took this into account and would stop snaps from writing files into this directory (and hence, say, creating a shell script that you would then execute later, outside of the snap sandbox)

- BUT it did not prevent a snap from creating this directory if it didn’t already exist
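The first two snapd issues both come down to trusting the type of a file inside the snap. A minimal sketch of the missing check, assuming the goal is to copy out only regular files; safe_to_copy is a hypothetical helper and snapd’s actual fix is written in Go:

```shell
#!/usr/bin/env bash
# Before copying a file out of a mounted snap (eg a .desktop file or icon),
# make sure it is a regular file and not a symlink or named pipe.
# safe_to_copy is a hypothetical helper used for illustration.
safe_to_copy() {
  local f=$1
  # check -L first, because -f follows symlinks
  [ -L "$f" ] && return 1
  # -f is true only for regular files, so FIFOs, devices and directories fail
  [ -f "$f" ]
}
```

In a real implementation it is more robust to open the file with O_NOFOLLOW and O_NONBLOCK and then check the type of the opened descriptor, so that the check and the read apply to the same object.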

[USN-6941-1] Python vulnerability (11:15)

- 1 CVEs addressed in Noble (24.04 LTS)

- [USN-6928-1] Python vulnerabilities from Episode 233

[USN-6909-2] Bind vulnerabilities (11:30)

- 2 CVEs addressed in Bionic ESM (18.04 ESM)

- 2 different CPU-based DoS

- Didn’t restrict the number of resource records for a given hostname - if an attacker could arrange for a large number of RRs, then could degrade the performance of bind due to it having to perform expensive lookups across all the records

- introduce a limit of 100 RRs for a given name

- Removed support for DNSSEC SIG(0) transaction signatures since they could be abused to perform a CPU-based DoS

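As a rough illustration of the new limit, the hypothetical helper below counts records per owner name in a zone-style listing and flags names over a threshold (100 by default, matching the limit mentioned above). It only shows the idea; the real check lives inside named, and its exact granularity is not captured here:

```shell
#!/usr/bin/env bash
# Report owner names with more than $2 (default 100) resource records in a
# zone-style listing of "name ttl class type data" lines.
# names_over_rr_limit is a hypothetical helper used for illustration.
names_over_rr_limit() {
  local file=$1 max=${2:-100}
  # skip blank lines and ";" comments, then count records per owner name
  awk -v max="$max" '
    NF == 0 || $1 ~ /^;/ { next }
    { count[$1]++ }
    END { for (n in count) if (count[n] > max) print n, count[n] }
  ' "$file"
}
```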

[USN-6943-1] Tomcat vulnerabilities (12:26)

- 5 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS)

[USN-6942-1] Gross vulnerability (12:33)

- 1 CVE addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- greylisting server used in MTA setups to minimise spam - uses DNS block lists to tag mails which come from these domains as possible spam

- stack buffer overflow through the use of strncat() during logging - would concatenate a list of parameters as a string into a fixed-size buffer on the stack but would pass the entire buffer size as the length argument rather than accounting for the remaining space in the buffer

- as these parameters can be controlled by an attacker, this can be used to either crash grossd or get RCE
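The bug is the classic strncat() misuse: its length argument is the maximum number of characters to append, not the size of the destination buffer, so the correct bound is the space left after the existing contents and the terminating NUL. The arithmetic can be illustrated in shell; bounded_append is hypothetical and counts characters, so it assumes ASCII input:

```shell
#!/usr/bin/env bash
# Emulates strncat(buf, src, size - strlen(buf) - 1): append at most the
# space remaining in a buffer of $size bytes, keeping one byte for the NUL.
# Passing $size itself (as the vulnerable code did) could write past the end.
# bounded_append is a hypothetical helper used for illustration.
bounded_append() {
  local buf=$1 src=$2 size=$3
  local room=$(( size - ${#buf} - 1 ))
  (( room < 0 )) && room=0
  printf '%s' "$buf${src:0:room}"
}
```

For example, bounded_append "abc" "defgh" 6 prints abcde: a 6-byte buffer already holding 3 characters has room for only 2 more plus the NUL.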

[USN-6944-1] curl vulnerability (13:55)

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Possible OOB read through a crafted ASN.1 Generalized Time field when parsing a TLS certificate chain - would potentially use a negative length value and hence try to calculate the length of a string while pointing to the wrong memory region - crash / info leak

- Only vulnerable if the application specifically uses the CURLINFO_CERTINFO option though: https://curl.se/libcurl/c/CURLINFO_CERTINFO.html

[USN-6200-2] ImageMagick vulnerabilities (14:52)

- 20 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

- [USN-6200-1] ImageMagick vulnerabilities from Episode 202

[USN-6946-1] Django vulnerabilities (15:04)

- 4 CVEs addressed in Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- SQL injection via crafted JSON in methods on the QuerySet class, and various DoS - one via very large inputs of Unicode characters in certain input fields, another through floatformat template filter - would use a large amount of memory if given a number in scientific notation with a large exponent

[USN-6945-1] wpa_supplicant and hostapd vulnerability (15:42)

- 1 CVE addressed in Trusty ESM (14.04 ESM), Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Possible privilege escalation through abuse of a DBus method to get wpa_supplicant to load an attacker-controlled shared object into memory

Goings on in Ubuntu Security Community

Discussion of CVE-2024-5290 in wpa_supplicant (16:10)

- Reported privately to us by Rory McNamara from Snyk as part of a larger disclosure of various security issues they had found

- Issue specific to Debian and Ubuntu - both include a patch to the dbus policy for wpa_supplicant to allow various methods to be called by users in the netdev group - a historical hangover from before we had Network Manager etc to do this

- nowadays, Network Manager allows the user who is logged in to control access to wireless networks etc

- historically though, Debian had the netdev group instead - so you would add your user to this group to allow them to configure network settings etc

- so makes sense to allow that group to control wpa_supplicant via its dbus interface

- DBus API includes a method called CreateInterface - takes an argument called ConfigFile which specifies the path to a configuration file using the format of wpa_supplicant.conf - the config file includes a parameter for opensc_engine_path, or similarly PKCS11 engine and module paths - these are shared objects which then get dynamically loaded into memory by wpa_supplicant

- hence an attacker could supply their own shared object, overwriting existing functions and therefore getting code execution as root, since wpa_supplicant runs as root

- upstream actually includes a patch to hard-code these values at compile-time and not allow them to be specified in the config file BUT we don’t use this in Ubuntu since it was only introduced recently (so not all Ubuntu releases include it) and, regardless, we want to support setups where these modules may live in different locations

- Discussed how to possibly fix this in LP: #2067613

- Is not an issue for upstream since the upstream policy only allows root to use this dbus method so there is no privilege escalation

- Could allow-list various paths but was not clear which ones to use

- Lukas from Foundations team (and maintainer of Netplan) tried searching for any users of these config parameters but couldn’t find anything in the archive

- However, users may still be configuring things so don’t want to break their setups

- Or could tighten up the DBus policy for the netdev group to NOT include access to this method - but this may break existing things that are using the netdev group and this method

- Marc from our team then tried looking for anything in Ubuntu which used the wpa_supplicant DBus interface - none appear to make use of the netdev group

- Considered dropping support entirely for this feature which allows the netdev group access, since in general this should be done with NetworkManager or netplan nowadays anyway

- But this is such a long-standing piece of functionality it wasn’t clear what the possible regression potential would be


- Or we could patch wpa_supplicant to check that the specified module was owned by root - this should then stop an unprivileged user from creating their own module and specifying it, as it wouldn’t be owned by root

- This looked promising and a patch was drafted and tested against the proof-of-concept and was able to block it

- However, Rory came back with some excellent research showing it could be bypassed by some quite creative use of a crafted FUSE filesystem in combination with overlayfs inside an unprivileged user namespace (ie. unpriv userns strikes again)

- create a FUSE filesystem which lies about the uid of a file to say it is 0 (root)

- mount this as an unprivileged user

- create a new user and mount namespace through unshare

- within that (since you are “root”) mount an overlay filesystem using the FUSE fs

- Specify the path to this file using the special root link inside the proc filesystem (/proc/<pid>/root) - which points to the actual root directory of that process - and since the FUSE fs lies about the UID, the file looks like it is root owned

- So at this point we were running out of ideas - Luci from our team suggested manually walking the path to the specified file, akin to how realpath works (which should block the ability to read it via the proc symlink) - but this was considered too complicated and possibly prone to a TOCTOU race

- Finally Marc proposed to simply allow-list anything under /usr/lib, since anything installed from the archive would live here - in this case we simply call realpath() directly on the provided path name and if it doesn’t start with /usr/lib then deny loading of the module

- No way to race against this and would seem to have the least chance of regression

- Yes, if using a non-standard location like /opt this would now fail, BUT if you can write to /opt then you can write to somewhere in /usr/lib - so it is easy to fix as well


- Was tested extensively, both with a dummy PKCS11 provider and with a real one, to ensure it works as expected (both to prevent the exploit and to work as intended)

- Eventual solution then was both secure and would also appear to minimise the chance of regressions

- None reported so far anyway ;)

- Demonstrates the careful balance between security and possible regressions

- Also the team effort of both the security team and other Ubuntu teams

- Thanks to Marc, Luci, Mark E, and Sudhakar on our side, and Lukas from Foundations, but most importantly to Rory from Snyk for both reporting the vuln but also in their help evaluating the various proposed solutions
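The final approach can be sketched in shell: resolve the requested module path fully, then accept it only if the result lives under /usr/lib. This assumes GNU coreutils realpath (-e fails when the path does not exist, much like realpath(3)); module_path_allowed is a hypothetical name and this is not the actual wpa_supplicant patch:

```shell
#!/usr/bin/env bash
# Allow loading a PKCS11/OpenSC module only if its fully resolved path lies
# under /usr/lib/. realpath -e (GNU coreutils) resolves "..", symlinks and
# /proc/<pid>/root style links, and fails if the file does not exist.
# module_path_allowed is a hypothetical helper used for illustration.
module_path_allowed() {
  local resolved
  resolved=$(realpath -e -- "$1" 2>/dev/null) || return 1
  case $resolved in
    /usr/lib/*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Matching /usr/lib/ with a trailing slash is a choice made here so that a sibling such as /usr/lib64 or /usr/libfoo does not slip through. The resolved path, rather than the user-supplied one, is what should then be handed to the loader; otherwise the check and the load could see different files.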

Get in contact

Episode 233

Overview

This week we take a look at the recent Crowdstrike outage and what we can learn from it compared to the testing and release process for security updates in Ubuntu, plus we cover details of vulnerabilities in poppler, phpCAS, EDK II, Python, OpenJDK and one package with over 300 CVE fixes in a single update.

This week in Ubuntu Security Updates

462 unique CVEs addressed

[USN-6915-1] poppler vulnerability (01:35)

- 1 CVE addressed in Jammy (22.04 LTS), Noble (24.04 LTS)

- Installed by default in Ubuntu due to use by cups

- PDF document format describes a Catalog which has a tree of destinations - essentially hyperlinks within the document. These can be either a page number etc or a named location within the document. If a user opens a crafted document with a missing name property for a destination, the name would then be NULL and would trigger a NULL ptr deref -> crash -> DoS

[USN-6913-1] phpCAS vulnerability (02:26)

- 1 CVE addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

- Authentication library for PHP to allow PHP applications to authenticate users against a Central Authentication Server (ie. SSO).

- When used for SSO, a client who is trying to use a web application gets directed to the CAS. The CAS then authenticates the user and returns a service ticket - the client then needs to validate this ticket with the CAS since it could have possibly been injected via the application. To do this, it passes the ticket along with its own service identifier to the CAS - and if this succeeds it is provided with the details of which user was authenticated etc.

- Previously, clients would use HTTP headers to determine where the CAS server was in order to validate the ticket. Since these can be manipulated by a malicious application, it could essentially redirect the client to send the ticket to the attacker, who could then use that to impersonate the client and log in as the user.

- Fix requires a refactor to include an additional API parameter which specifies either a fixed CAS server for the client to use, or a mechanism to auto-discover this in a secure way - either way, applications using phpCAS now need to be updated.

[USN-6914-1] OCS Inventory vulnerability

- 1 CVE addressed in Jammy (22.04 LTS)

- Same issue as above, since it has an embedded copy of phpCAS

[USN-6916-1] Lua vulnerabilities (04:44)

- 2 CVEs addressed in Jammy (22.04 LTS)

- Heap buffer over-read and a possible heap buffer overflow via recursive error handling - both appear to require interpreting malicious code

[USN-6920-1] EDK II vulnerabilities (05:04)

- 5 CVEs addressed in Xenial ESM (16.04 ESM), Bionic ESM (18.04 ESM)

- UEFI firmware implementation in qemu etc

- Various missing bounds checks -> stack and heap buffer overflows -> DoS or code execution in BIOS context -> privilege escalation within VM

[USN-6928-1] Python vulnerabilities (05:49)

- 2 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

- Memory race in the ssl module - from different threads, various functions that return certificate information can be called at the same time as certificates are being loaded, eg during a TLS handshake with a certificate directory configured. Python could then return inconsistent results, leading to various issues

- Occurs since ssl module is implemented in C to interface with openssl and did not properly lock access to the certificate store

[USN-6929-1, USN-6930-1] OpenJDK 8 and OpenJDK 11 vulnerabilities (06:52)

- 6 CVEs addressed in Bionic ESM (18.04 ESM), Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Latest upstream releases of OpenJDK 8 and 11

- 8u422-b05-1, 11.0.24+8

- Fixes various issues in the Hotspot and Concurrency components

[USN-6931-1, USN-6932-1] OpenJDK 17 and OpenJDK 21 vulnerabilities (07:11)

- 5 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Latest upstream releases of OpenJDK 17 and 21

- 17.0.12+7, 21.0.4+7

- Fixes the same issues in the Hotspot component

[USN-6934-1] MySQL vulnerabilities (07:29)

- 15 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS), Noble (24.04 LTS)

- Also latest upstream release

- 8.0.39

- Bug fixes, possible new features and incompatible changes - consult release notes:

[USN-6917-1] Linux kernel vulnerabilities (07:57)

- 156 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)

- CVE-2024-35933

- CVE-2024-35910

- CVE-2024-27393

- CVE-2024-27004

- CVE-2024-27396

- CVE-2024-36029

- CVE-2024-26955

- CVE-2024-35976

- CVE-2024-26966

- CVE-2024-26811

- CVE-2024-35871

- CVE-2023-52699

- CVE-2024-35796

- CVE-2024-35851

- CVE-2024-35885

- CVE-2024-35813

- CVE-2024-35789

- CVE-2024-35825

- CVE-2024-26994

- CVE-2024-35815

- CVE-2024-27395

- CVE-2024-26981

- CVE-2024-35886

- CVE-2024-26931

- CVE-2024-35791

- CVE-2024-35849

- CVE-2024-35978

- CVE-2024-35895

- CVE-2024-35918

- CVE-2024-35902

- CVE-2024-26926

- CVE-2024-35934

- CVE-2024-35807

- CVE-2024-35805

- CVE-2024-36008

- CVE-2024-26950

- CVE-2024-26973

- CVE-2024-35898

- CVE-2024-35955

- CVE-2024-36004

- CVE-2024-36006

- CVE-2024-35990

- CVE-2024-35944

- CVE-2024-36007

- CVE-2024-35896

- CVE-2024-35819

- CVE-2024-26988

- CVE-2024-35872

- CVE-2024-36025

- CVE-2024-26957

- CVE-2024-35897

- CVE-2024-27016

- CVE-2024-35806

- CVE-2024-35927

- CVE-2022-48808

- CVE-2024-35960

- CVE-2024-27001

- CVE-2024-35970

- CVE-2024-35988

- CVE-2024-36005

- CVE-2024-35821

- CVE-2024-35925

- CVE-2024-26961

- CVE-2024-35817

- CVE-2024-26922

- CVE-2024-26976

- CVE-2024-35899

- CVE-2024-35984

- CVE-2024-26929

- CVE-2024-27018

- CVE-2024-35907

- CVE-2024-35884

- CVE-2023-52488

- CVE-2024-35982

- CVE-2024-26934

- CVE-2024-26935

- CVE-2024-35973

- CVE-2024-26958

- CVE-2024-27008

- CVE-2024-35809

- CVE-2024-26951

- CVE-2024-35900

- CVE-2024-35888

- CVE-2024-26965

- CVE-2024-26828

- CVE-2024-35935

- CVE-2024-35857

- CVE-2024-26642

- CVE-2024-26989

- CVE-2024-35893

- CVE-2024-35877

- CVE-2024-27009

- CVE-2024-35785

- CVE-2024-35905

- CVE-2024-27020

- CVE-2024-35901

- CVE-2024-26956

- CVE-2024-26977

- CVE-2024-26969

- CVE-2024-26810

- CVE-2024-26813

- CVE-2024-35930

- CVE-2024-26970

- CVE-2024-26687

- CVE-2024-27015

- CVE-2024-35847

- CVE-2024-26999

- CVE-2024-35940

- CVE-2024-35890

- CVE-2024-26814

- CVE-2024-35958

- CVE-2024-35804

- CVE-2024-26629

- CVE-2024-26974

- CVE-2023-52880

- CVE-2024-26937

- CVE-2024-35922

- CVE-2024-35854

- CVE-2024-27013

- CVE-2024-35853

- CVE-2024-27000

- CVE-2024-35989

- CVE-2024-35852

- CVE-2024-35823

- CVE-2024-36020

- CVE-2024-36031

- CVE-2024-26923

- CVE-2024-26654

- CVE-2024-26925

- CVE-2024-35855

- CVE-2024-35997

- CVE-2024-35822

- CVE-2024-27019

- CVE-2024-35938

- CVE-2024-35915

- CVE-2024-35912

- CVE-2024-35936

- CVE-2024-35969

- CVE-2024-27059

- CVE-2024-26964

- CVE-2024-27437

- CVE-2024-26960

- CVE-2024-35950

- CVE-2024-26817

- CVE-2024-26984

- CVE-2024-26812

- CVE-2024-35879

- CVE-2024-26996

- CVE-2024-26993

- CVE-2024-25739

- CVE-2024-24861

- CVE-2024-24859

- CVE-2024-24858

- CVE-2024-24857

- CVE-2024-23307

- CVE-2022-38096

- 5.15 - Azure + FDE (CVM)

[USN-6918-1] Linux kernel vulnerabilities

- 180 CVEs addressed in Noble (24.04 LTS)

- CVE-2024-24859

- CVE-2024-24858

- CVE-2024-24857

- CVE-2024-35932

- CVE-2024-35937

- CVE-2024-27006

- CVE-2024-35960

- CVE-2024-27011

- CVE-2024-35924

- CVE-2024-35946

- CVE-2024-35942

- CVE-2024-35921

- CVE-2024-35908

- CVE-2024-26811

- CVE-2024-27008

- CVE-2024-35871

- CVE-2024-36019

- CVE-2024-35965

- CVE-2024-35973

- CVE-2024-26981

- CVE-2024-27009

- CVE-2024-27019

- CVE-2024-36022

- CVE-2024-35910

- CVE-2024-35907

- CVE-2024-35860

- CVE-2024-35951

- CVE-2024-26924

- CVE-2024-26921

- CVE-2024-35901

- CVE-2024-35972

- CVE-2024-35889

- CVE-2024-27017

- CVE-2024-35913

- CVE-2024-35936

- CVE-2024-36025

- CVE-2024-35961

- CVE-2024-35977

- CVE-2024-35902

- CVE-2024-26817

- CVE-2024-26994

- CVE-2023-52699

- CVE-2024-35868

- CVE-2024-35899

- CVE-2024-35888

- CVE-2024-26995

- CVE-2024-35865

- CVE-2024-26993

- CVE-2024-35863

- CVE-2024-35970

- CVE-2024-35943

- CVE-2024-35875

- CVE-2024-35978

- CVE-2024-27005

- CVE-2024-35909

- CVE-2024-35957

- CVE-2024-35950

- CVE-2024-26986

- CVE-2024-36020

- CVE-2024-35952

- CVE-2024-26928

- CVE-2024-35878

- CVE-2024-35954

- CVE-2024-26998

- CVE-2024-36024

- CVE-2024-26936

- CVE-2024-27018

- CVE-2024-35900

- CVE-2024-35940

- CVE-2024-35985

- CVE-2024-35944

- CVE-2024-35958

- CVE-2024-35864

- CVE-2024-35975

- CVE-2024-27002

- CVE-2024-36018

- CVE-2024-35974

- CVE-2024-26926

- CVE-2024-35877

- CVE-2024-35916

- CVE-2024-35934

- CVE-2024-35930

- CVE-2024-35898

- CVE-2024-35893

- CVE-2024-35887

- CVE-2024-35929

- CVE-2024-26923

- CVE-2024-35911

- CVE-2024-35919

- CVE-2024-26984

- CVE-2024-27016

- CVE-2024-35926

- CVE-2024-35872

- CVE-2024-35922

- CVE-2024-27007

- CVE-2024-35931

- CVE-2024-36021

- CVE-2024-35953

- CVE-2024-27004

- CVE-2024-27001

- CVE-2024-27014

- CVE-2024-35866

- CVE-2024-27021

- CVE-2024-35870

- CVE-2024-35925

- CVE-2024-35891

- CVE-2024-26982

- CVE-2024-35879

- CVE-2024-35979

- CVE-2024-35912

- CVE-2024-35982

- CVE-2024-27015

- CVE-2024-26985

- CVE-2024-35861

- CVE-2024-35939

- CVE-2024-27003

- CVE-2024-35945

- CVE-2024-35967

- CVE-2024-35966

- CVE-2024-26983

- CVE-2024-35894

- CVE-2024-35896

- CVE-2024-36027

- CVE-2024-35895

- CVE-2024-26987

- CVE-2024-35873

- CVE-2024-26996

- CVE-2024-26991

- CVE-2024-27013

- CVE-2024-36026

- CVE-2024-26922

- CVE-2024-35897

- CVE-2024-35917

- CVE-2024-35968

- CVE-2024-35890

- CVE-2024-35904

- CVE-2024-35867

- CVE-2024-35933

- CVE-2024-35918

- CVE-2024-35920

- CVE-2024-26997

- CVE-2024-35981

- CVE-2024-35963

- CVE-2024-26989

- CVE-2024-26999

- CVE-2024-35892

- CVE-2024-27010

- CVE-2024-26992

- CVE-2024-35935

- CVE-2024-27022

- CVE-2024-35971

- CVE-2024-35956

- CVE-2024-35862

- CVE-2024-35969

- CVE-2024-27012

- CVE-2024-26990

- CVE-2024-35885

- CVE-2024-26925

- CVE-2024-35905

- CVE-2024-35914

- CVE-2024-35884

- CVE-2024-35927

- CVE-2024-35882

- CVE-2024-26980

- CVE-2024-35964

- CVE-2024-35955

- CVE-2024-27020

- CVE-2024-35980

- CVE-2024-35903

- CVE-2024-35976

- CVE-2024-35886

- CVE-2024-35883

- CVE-2024-35959

- CVE-2024-35915

- CVE-2024-35880

- CVE-2024-27000

- CVE-2024-35938

- CVE-2024-35869

- CVE-2024-36023

- CVE-2024-26988

- 6.8 - Oracle

[USN-6919-1] Linux kernel vulnerabilities

- 304 CVEs addressed in Jammy (22.04 LTS)

- CVE-2024-35976

- CVE-2023-52880

- CVE-2024-35849

- CVE-2024-27073

- CVE-2024-35934

- CVE-2024-27038

- CVE-2024-26973

- CVE-2024-35853

- CVE-2024-27047

- CVE-2024-36007

- CVE-2024-27024

- CVE-2024-26750

- CVE-2024-26833

- CVE-2024-26960

- CVE-2024-26929

- CVE-2023-52488

- CVE-2024-27417

- CVE-2024-26922

- CVE-2024-26863

- CVE-2024-35890

- CVE-2024-27015

- CVE-2024-27395

- CVE-2024-26779

- CVE-2024-27419

- CVE-2024-27013

- CVE-2024-26981

- CVE-2024-26798

- CVE-2024-26895

- CVE-2024-35922

- CVE-2023-52699

- CVE-2024-26883

- CVE-2024-35871

- CVE-2024-27410

- CVE-2024-26884

- CVE-2024-26885

- CVE-2024-27074

- CVE-2024-26751

- CVE-2024-26857

- CVE-2024-26848

- CVE-2024-26901

- CVE-2024-35844

- CVE-2024-35809

- CVE-2024-26687

- CVE-2024-35988

- CVE-2024-26835

- CVE-2024-26764

- CVE-2024-27020

- CVE-2024-35907

- CVE-2024-35886

- CVE-2024-27077

- CVE-2024-26787

- CVE-2024-26950

- CVE-2024-26974

- CVE-2024-35905

- CVE-2024-27008

- CVE-2024-26744

- CVE-2024-35935

- CVE-2024-26988

- CVE-2024-26748

- CVE-2024-26776

- CVE-2024-26907

- CVE-2024-27053

- CVE-2024-35970

- CVE-2024-35950

- CVE-2024-35854

- CVE-2024-35822

- CVE-2024-26961

- CVE-2024-26733

- CVE-2024-26773

- CVE-2024-27390

- CVE-2024-35888

- CVE-2024-36029

- CVE-2024-26643

- CVE-2024-35821

- CVE-2024-35819

- CVE-2024-26809

- CVE-2024-35984

- CVE-2024-26851

- CVE-2024-35940

- CVE-2024-26654

- CVE-2024-35910

- CVE-2024-26891

- CVE-2024-26793

- CVE-2024-35938

- CVE-2024-26736

- CVE-2024-26583

- CVE-2024-26870

- CVE-2024-35828

- CVE-2024-35885

- CVE-2024-35958

- CVE-2024-26889

- CVE-2024-35899

- CVE-2024-26839

- CVE-2024-26894

- CVE-2024-26937

- CVE-2024-35925

- CVE-2024-35933

- CVE-2024-26771

- CVE-2024-26923

- CVE-2024-26852

- CVE-2024-26924

- CVE-2024-26872

- CVE-2024-26774

- CVE-2024-35930

- CVE-2024-27065

- CVE-2024-26993

- CVE-2024-27034

- CVE-2024-36020

- CVE-2024-26802

- CVE-2024-26976

- CVE-2022-48808

- CVE-2024-35847

- CVE-2024-26996

- CVE-2024-36025

- CVE-2023-52652

- CVE-2024-27403

- CVE-2023-52447

- CVE-2024-27037

- CVE-2024-27413

- CVE-2024-26749

- CVE-2024-26956

- CVE-2024-26958

- CVE-2024-26754

- CVE-2024-26812

- CVE-2024-26772

- CVE-2024-27436

- CVE-2024-27437

- CVE-2024-35912

- CVE-2024-35805

- CVE-2024-26845

- CVE-2024-35990

- CVE-2024-35791

- CVE-2024-26906

- CVE-2024-27039

- CVE-2024-26915

- CVE-2024-26970

- CVE-2024-26782

- CVE-2024-26813

- CVE-2023-52645

- CVE-2024-26935

- CVE-2024-27076

- CVE-2024-35823

- CVE-2024-26743

- CVE-2024-26846

- CVE-2024-26811

- CVE-2024-26989

- CVE-2024-26642

- CVE-2024-26659

- CVE-2024-26766

- CVE-2024-27393

- CVE-2024-26859

- CVE-2024-35898

- CVE-2024-35893

- CVE-2023-52640

- CVE-2024-26795

- CVE-2024-27009

- CVE-2024-26791

- CVE-2024-27043

- CVE-2024-26934

- CVE-2024-27051

- CVE-2024-26804

- CVE-2024-26878

- CVE-2024-27030

- CVE-2024-27000

- CVE-2024-26777

- CVE-2024-35825

- CVE-2024-27415

- CVE-2024-27001

- CVE-2024-27004

- CVE-2024-26769

- CVE-2024-26816

- CVE-2024-35807

- CVE-2024-35900

- CVE-2024-35851

- CVE-2024-27052

- CVE-2024-26805

- CVE-2024-35804

- CVE-2024-35944

- CVE-2024-35895

- CVE-2024-26897

- CVE-2024-27045

- CVE-2024-26814

- CVE-2024-26801

- CVE-2024-26874

- CVE-2024-35982

- CVE-2024-35915

- CVE-2024-26820

- CVE-2024-26603

- CVE-2024-35997

- CVE-2024-26688

- CVE-2024-27054

- CVE-2024-26828

- CVE-2024-35857

- CVE-2023-52662

- CVE-2024-35989

- CVE-2024-36005

- CVE-2024-35785

- CVE-2024-27396

- CVE-2024-35884

- CVE-2023-52650

- CVE-2024-26882

- CVE-2024-26879

- CVE-2024-26898

- CVE-2024-27388

- CVE-2024-35879

- CVE-2024-35918

- CVE-2024-35978

- CVE-2024-26585

- CVE-2024-35872

- CVE-2023-52497

- CVE-2024-26778

- CVE-2024-26999

- CVE-2024-27046

- CVE-2023-52434

- CVE-2024-26862

- CVE-2024-26810

- CVE-2024-35796

- CVE-2024-35960

- CVE-2024-35969

- CVE-2024-26966

- CVE-2024-26856

- CVE-2024-35936

- CVE-2024-35955

- CVE-2024-26763

- CVE-2024-35806

- CVE-2024-27059

- CVE-2024-35855

- CVE-2024-36008

- CVE-2024-27075

- CVE-2023-52620

- CVE-2024-26931

- CVE-2024-35813

- CVE-2024-26788

- CVE-2024-27412

- CVE-2024-26861

- CVE-2024-36004

- CVE-2024-26951

- CVE-2024-26903

- CVE-2024-26584

- CVE-2024-35877

- CVE-2024-26792

- CVE-2024-27416

- CVE-2024-27432

- CVE-2024-26651

- CVE-2024-35852

- CVE-2024-35973

- CVE-2023-52656

- CVE-2024-26965

- CVE-2024-26969

- CVE-2024-26840

- CVE-2024-26817

- CVE-2024-27028

- CVE-2024-26752

- CVE-2024-27016

- CVE-2023-52641

- CVE-2024-35789

- CVE-2024-27078

- CVE-2024-26994

- CVE-2024-26629

- CVE-2024-26803

- CVE-2024-26977

- CVE-2024-35830

- CVE-2024-27019

- CVE-2024-26957

- CVE-2024-36006

- CVE-2024-35817

- CVE-2024-26601

- CVE-2024-35845

- CVE-2024-35897

- CVE-2024-27414

- CVE-2024-26855

- CVE-2024-26877

- CVE-2024-35829

- CVE-2024-35896

- CVE-2024-26875

- CVE-2024-27405

- CVE-2024-26747

- CVE-2023-52644

- CVE-2024-26881

- CVE-2024-26735

- CVE-2024-26843

- CVE-2024-26926

- CVE-2024-26880

- CVE-2024-26964

- CVE-2024-27044

- CVE-2024-26737

- CVE-2024-27431

- CVE-2024-26955

- CVE-2024-26790

- CVE-2024-26925

- CVE-2024-26838

- CVE-2024-26984

- CVE-2024-25739

- CVE-2024-24861

- CVE-2024-24859

- CVE-2024-24858

- CVE-2024-24857

- CVE-2024-23307

- CVE-2024-22099

- CVE-2024-21823

- CVE-2024-0841

- CVE-2023-7042

- CVE-2023-6270

- CVE-2022-38096

- 5.15 - Raspi

[USN-6922-1] Linux kernel vulnerabilities

- 4 CVEs addressed in Jammy (22.04 LTS)

- 6.5 - NVIDIA

[USN-6923-1, USN-6923-2] Linux kernel vulnerabilities

- 6 CVEs addressed in Focal (20.04 LTS), Jammy (22.04 LTS)